On the inherent uncertainty of project task estimates

The accuracy of a project schedule depends on the accuracy of the individual activity (or task) duration estimates that go into it. Project managers know this from (often bitter) experience. Treatises such as the gospel according to PMBOK recognise this, and exhort project managers to estimate uncertainties and include them when reporting activity durations. However, the same books have little to say on how these uncertainties should be integrated into the project schedule in a meaningful way. Sure, well-established techniques such as PERT do incorporate probabilities into schedules via averaged or expected durations. But the resulting schedules are always treated as deterministic, with each task (and hence, the project) having a definite completion date. Schedules rarely, if ever, make explicit allowance for uncertainties.

In this post I look into the nature of uncertainty in project tasks – in particular I focus on the probability distribution of task durations. My approach is intuitive and somewhat naive. Having said that up front, I trust purists and pedants will bear with my somewhat loose use of terminology relating to probability theory.

Theory is good for theorists; practitioners prefer examples, so I’ll start with one. Consider an activity that you do regularly – such as getting ready in the morning. Since you’ve done it so often, you have a pretty good idea how long it takes on average. Say it takes you an hour on average – from when you get out of bed to when you walk out of your front door. Clearly, on a particular day you could be super-quick and finish in 45 minutes, or even 40 minutes. However, there’s a lower limit to the early finish – you can’t get ready in 0 minutes! Let’s say the lower limit is 30 minutes. On the other hand, there’s really no upper limit. On a bad day you could take a few hours. Or if you slip in the shower and hurt your back, you could take a few days! So, in terms of probabilities, we have a 0% probability at the lower limit and also at infinity (since the probability of taking an infinite time to get to work is essentially zero). In between we’d expect the probability to hit a maximum at a lowish value of time (maybe 50 minutes or so). Beyond the maximum, the probability would decay rapidly at first, then more slowly, approaching zero at infinite time.

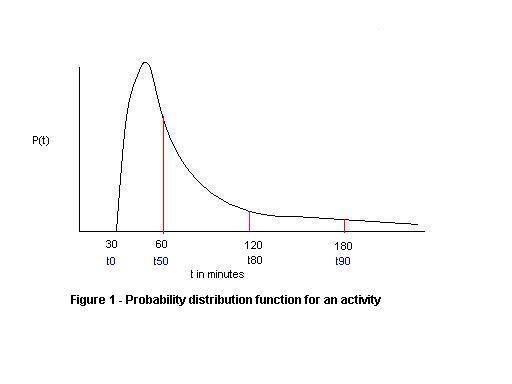

If we were to plot the probability of activity completion for this example as a function of time, it would look like the long-tailed function I’ve depicted in Figure 1. The distribution starts at a non-zero cutoff (corresponding to the minimum time for the activity); increases to a maximum (corresponding to the most probable time); and then falls off rapidly at first, then with a long, slowly decaying tail. The mean (or average) of the distribution is located to the right of the maximum because of the long tail. In the example, 30 minutes is the minimum time for completion, so the probability of finishing within 30 minutes is 0%. There’s a 50% probability of completion within an hour, an 80% probability of completion within 2 hours and a 90% probability of completion within 3 hours. The large values of the 80% and 90% completion times compared to the 50% completion time are a consequence of the long tail. In the example, the tail – which goes all the way to infinity – accounts for the remote possibility that you may slip in the shower, hurt yourself badly, and make it to work very late (or maybe not at all!).
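To make these numbers concrete, here is a minimal sketch that assumes the morning routine follows a shifted lognormal distribution. That choice is my assumption for illustration only; the example above does not fix a functional form. The two shape parameters are chosen so that the 50% and 80% completion times match the example (60 and 120 minutes), and the names `T_MIN`, `completion_time`, `mean_time` and `most_likely_time` are all hypothetical.

```python
from math import exp, log
from statistics import NormalDist

# Assumption: duration = T_MIN + lognormal(MU, SIGMA). Illustrative only.
T_MIN = 30.0   # minimum possible duration in minutes (from the example)
T_50 = 60.0    # 50% chance of finishing within this time (from the example)
T_80 = 120.0   # 80% chance of finishing within this time (from the example)

_std = NormalDist()  # standard normal, used for its inverse CDF

# Solve for the lognormal parameters from the two known completion times.
MU = log(T_50 - T_MIN)
SIGMA = (log(T_80 - T_MIN) - MU) / _std.inv_cdf(0.80)


def completion_time(p):
    """Time (minutes) within which the activity finishes with probability p."""
    return T_MIN + exp(MU + SIGMA * _std.inv_cdf(p))


def mean_time():
    """Mean duration (minutes) of the shifted lognormal."""
    return T_MIN + exp(MU + SIGMA ** 2 / 2)


def most_likely_time():
    """Location of the peak (mode) of the shifted lognormal, in minutes."""
    return T_MIN + exp(MU - SIGMA ** 2)


if __name__ == "__main__":
    for p in (0.50, 0.80, 0.90, 0.99):
        print(f"{p:.0%} chance of finishing within {completion_time(p):.0f} minutes")
    print(f"most likely: {most_likely_time():.0f} min, mean: {mean_time():.0f} min")
```

Under these assumptions the 90% completion time comes out a little over three hours, in rough agreement with the example, and the peak, the 50% point and the mean appear in that order from left to right, which is the signature of a long tail. The fitted curve will not reproduce every number in the example (its peak sits much closer to the cutoff, for instance), so treat it as a picture of the shape rather than a model of anyone's mornings.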

It turns out that many phenomena can be modeled by this kind of long-tailed distribution. Some of the better known long-tailed distributions include lognormal and power law distributions. A quick, informal review of project management literature revealed that lognormal distributions are more commonly used than power laws to model activity duration uncertainties. This may be because lognormal distributions have a finite mean and variance whereas power law distributions can have infinite values for both (see this presentation by Michael Mitzenmacher, for example). [An aside: if you’re curious as to why infinities are possible in the latter, it is because power laws decay more slowly than lognormal distributions – i.e. they have “fatter” tails, so the integrals that define the mean and variance can grow without bound.] In any case, regardless of the exact form of the distribution for activity durations, what’s important and non-controversial is the short cutoff, the peak and the long, decaying tail. These characteristics are true of all probability distributions that describe activity durations.
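For readers who want to see where the infinities come from, here is a short worked example. It assumes the simplest power law, a Pareto density with exponent \alpha and cutoff t_{\min}; both symbols are my notation, not something taken from the literature mentioned above.

```latex
\begin{align*}
p(t) &= \frac{\alpha - 1}{t_{\min}} \left( \frac{t}{t_{\min}} \right)^{-\alpha},
  \qquad t \ge t_{\min},\ \alpha > 1 \\[4pt]
\langle t \rangle &= \int_{t_{\min}}^{\infty} t \, p(t) \, dt
  = \frac{\alpha - 1}{\alpha - 2} \, t_{\min}
  \quad \text{if } \alpha > 2, \text{ and diverges if } \alpha \le 2 \\[4pt]
\langle t^{2} \rangle &= \int_{t_{\min}}^{\infty} t^{2} \, p(t) \, dt
  = \frac{\alpha - 1}{\alpha - 3} \, t_{\min}^{2}
  \quad \text{if } \alpha > 3, \text{ and diverges if } \alpha \le 3
\end{align*}
```

The density itself always integrates to one; it is the extra factors of t in the mean and variance integrals that make them blow up when the tail decays too slowly. A lognormal tail falls off faster than any power of t, so all of its moments are finite.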

There’s one immediate consequence of the long tail: if you want to be really, really sure of completing any activity, you have to add a lot of “air” or safety because there’s a chance that you may “slip in the shower” so to speak. Hence, many activity estimators add large buffers to their estimates. Project managers who suffer the consequences of the resulting inaccurate schedule are thus victims of the tail.

Very few methodologies explicitly acknowledge uncertainty in activity estimates, let alone present ways to deal with it. Those that do include the Critical Chain Method, Monte Carlo Simulation and Evidence Based Scheduling. The Critical Chain technique deals with uncertainty by slashing estimates to their 50% confidence values and consolidating safety or “air” into a single buffer, whereas the latter two techniques use simulations to generate expected durations (at appropriate confidence levels). It would take me way past my self-imposed word limit to discuss these any further, but I urge you to follow the links listed above if you want to find out more.
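To give a flavour of the simulation-based approach, here is a minimal sketch of a Monte Carlo run for a handful of tasks done one after the other. It is not the procedure used by any particular tool or by Evidence Based Scheduling; it assumes each task follows a shifted lognormal distribution and that the tasks are independent, which real project tasks often are not. The task parameters and the function names `simulate_chain` and `percentile` are made up for illustration.

```python
import random
from math import log
from statistics import mean


def simulate_chain(tasks, runs=20_000, seed=1):
    """Simulate total duration of tasks done in series.

    tasks: list of (t_min, mu, sigma) tuples, one per task, where a task's
    duration is assumed to be t_min + lognormal(mu, sigma).
    Returns the simulated totals, sorted in ascending order.
    """
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    totals = []
    for _ in range(runs):
        total = sum(t_min + rng.lognormvariate(mu, sigma)
                    for t_min, mu, sigma in tasks)
        totals.append(total)
    return sorted(totals)


def percentile(sorted_values, p):
    """Value below which a fraction p of the simulated totals fall."""
    index = min(len(sorted_values) - 1, int(p * len(sorted_values)))
    return sorted_values[index]


# Hypothetical tasks, durations in days: (minimum, mu, sigma).
TASKS = [
    (2.0, log(2.0), 0.8),
    (1.0, log(3.0), 0.8),
    (3.0, log(1.5), 0.8),
]

if __name__ == "__main__":
    totals = simulate_chain(TASKS)
    print(f"mean total: {mean(totals):.1f} days")
    for p in (0.50, 0.80, 0.90):
        print(f"{p:.0%} confidence: {percentile(totals, p):.1f} days")
```

The point of the exercise is that the output is a set of completion times at stated confidence levels rather than a single date, so the gap between the 50% and 90% figures shows directly how much “air” the long tails demand.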

(Note: Portions of this post are based on my article on the Critical Chain Method)

Hi, Kailash —

Great post! I hate for this comment to come across as promotional, but I felt compelled to bring to your attention the fact that one tool out there (ok, it happens to be made by the company I work for, LiquidPlanner) actually allows you to incorporate uncertainty into your project schedules in the form of ranged estimates. We actually use standard distributions (for the reasons behind that, I’d have to refer you to Bruce Henry, our Director of Rocket Science and the brain behind the scheduling engine). We find them to be far more valuable than single-point estimates which are almost always generously padded and give no real information — especially at the project level — of the probability of finishing the task or project by a certain date.

Liz Pearce

March 8, 2008 at 9:51 am

Liz,

I’m curious as to what distributions your product uses to model task duration probabilities. I couldn’t find any information on this on the LiquidPlanner site.

A Normal (or for that matter any symmetric) distribution isn’t appropriate for modelling duration probabilities.

Regards,

Kailash.

k

March 8, 2008 at 4:00 pm

I’d love to, but I’m afraid I’m not fully qualified to go into detail on the math. I’ve asked Bruce (the person I mentioned in my original comment) to explain more… I think he’ll chime in here before too long.

Liz Pearce

March 9, 2008 at 9:53 am

Sorry for the double comment but I horked the HTML in the first one and there was no preview to catch it or a way to edit it. 😦

Okay, since we’ve opened the Pandora’s box of statistics let’s go all in…

From Wikipedia:

The central limit theorem (CLT) states that if the sum of independent identically distributed random variables has a finite variance, then it will be approximately normally distributed (i.e., following a Gaussian distribution, or bell-shaped curve). Formally, a central limit theorem is any of a set of weak-convergence results in probability theory. They all express the fact that any sum of many independent and identically-distributed random variables will tend to be distributed according to a particular “attractor distribution”.

Yeah, okay… blah, blah, blah.

The end result is that by the time you’ve broken tasks down into the 1-2 day range (the way most project managers would recommend in a WBS) you end up with what are for all intents and purposes “identically-distributed random variables” whose total time to complete is a simple sum. Therefore they tend towards a normal distribution anyway. And unless you’re really good at estimating (i.e. have small variances in your estimates) your error in estimation will be far greater than the error introduced by the approximation of using a Gaussian distribution from the get-go.

The Gaussian distribution has an additional computational advantage: you can perform the calculations for thousands and thousands of tasks assigned to multiple people in near real-time as the estimates, uncertainties and dependencies between tasks are all updated by multiple users. So that’s what we’re doing with LiquidPlanner.

Try doing that with Monte Carlo simulations. 🙂

While you’re absolutely correct that theoretically symmetric distributions are completely inadequate for modeling the duration probability for any one task, they turn out to be quite a good approximation in practice when you have long strings of successive tasks.

If you have other questions feel free to email me @liquidplanner.com

Very good writeup BTW. I’m a big fan of critical chain and Goldratt’s work in general.

Bruce P. Henry

March 15, 2008 at 11:58 am

Bruce,

Many thanks for your detailed comments.

As far as I’m aware, the Central Limit Theorem (CLT) applies only if the random variables involved are independent and identically distributed. Both these assumptions are somewhat moot for project tasks.

Firstly, project tasks most often involve dependencies, which brings into question the assumption of independence. Secondly, although mean durations may be similar (as per your discussion), the shapes of the individual distributions (which are long tailed) can be wildly different. Obviously, these points are hard to address in any general way since they vary considerably from project to project. Are you aware of any references relating to the applicability of CLT in the context of project scheduling? Please let me know if you are. I did a search for this a while back, but couldn’t find any in the public domain. However, I’m sure some academic somewhere has looked into it…

Anyway, in the absence of any evidence (or justification) either way, you could make a case for using the CLT as you have done. Furthermore, the fact that it works in practice in your product (as I’m sure it does!) is in itself a sort of post-hoc justification. In the end, though, regardless of whether or not a Gaussian distribution is applicable at the level of several tasks, individual task durations remain stubbornly non-symmetric.

Regards,

Kailash.

k

March 15, 2008 at 4:08 pm

K,

You are correct. I know of no credible project where the tasks are independent. Otherwise why is it a project and not just a list of “to dos”?

There are several sources on this showing moderate to large unfavorable biases in forecasting from this assumption.

Glen B Alleman

April 16, 2010 at 1:01 am

[…] (Note: Portions of this section have been published previously in my post on the inherent uncertainty of project task estimates) […]

An introduction to the critical chain method « Eight to Late

August 21, 2009 at 1:56 pm

[…] an essay on the uncertainty of project task estimates, I described how a task estimate corresponds to a probability distribution. Put simply, […]

An introduction to Monte Carlo simulation of project tasks « Eight to Late

September 11, 2009 at 11:05 pm

[…] The Normal distribution (or bell curve) is not always appropriate. For example, see my posts on the inherent uncertainty of project task estimates for an intuitive discussion of the form of the probability distribution for project task […]

The failure of risk management: a book review « Eight to Late

February 11, 2010 at 10:12 pm

[…] than a single number. The broadness of the shape is a measure of the degree of uncertainty. See my post on the inherent uncertainty of project task estimates for an intuitive discussion of how a task estimate is a shape rather than a […]

The Flaw of Averages – a book review « Eight to Late

May 4, 2010 at 11:06 pm

[…] probabilities when we are uncertain about outcomes. As an example from project management, the uncertainty in the duration of a project task can be modeled using a probability distribution. In this case the probability distribution is a characterization of our uncertainty regarding how […]

On the origin of power laws in organizational phenomena « Eight to Late

July 29, 2010 at 6:00 am

[…] developing duration estimates for a project task, it is useful to make a distinction between uncertainty inherent in the task and uncertainty due to known risks. The former is uncertainty due to factors that are not known […]

Monte Carlo simulation of risk and uncertainty in project tasks | Eight to Late

February 17, 2011 at 9:56 pm

[…] [Aside: you may have noticed that all the distributions shown above are skewed to the right – that is they have a long tail. This is a general feature of distributions that describe time (or cost) of project tasks. It would take me too far afield to discuss why this is so, but if you’re interested you may want to check out my post on the inherent uncertainty of project task estimates. […]

A gentle introduction to Monte Carlo simulation for project managers | Eight to Late

March 27, 2018 at 4:11 pm