Archive for the ‘Organizations’ Category

The ethics and aesthetics of change

I recently attended a conference focused on Agile and systems-based approaches to tackling organizational problems. As one might expect at such a conference, many of the talks were about change – how to approach it, make a case for it, lead it etc. The thing that struck me most when listening to the speakers was how little the discourse on change has changed in the 25 odd years I have been in the business.

This is not the fault of the speakers. They were passionate, articulate and speaking from their own experiences at the coalface of change. Yet there was something missing.

What is lacking is a broad paradigm within which people can make sense of what is going on in their organisations and craft appropriate interventions by and for themselves, instead of imposing “change models” advocated by academics or consultants who have no skin in the game.

–x–

Much of the discourse on organizational change focuses on processes: do this in order to achieve that. To be sure, these approaches also mention the importance of the more intangible personal attitudes such as the awareness and desire for change. However, these too are considered achievable by deliberate actions of those who are “driving” change: do this to create awareness, do that to make people want change and so on.

The approach is entirely instrumental, even when it pretends not to be: people are treated as cogs in a large organizational wheel, to be cajoled, coaxed or coerced to change. This does not work. The inconvenient fact is that you cannot get people to change by telling them to change. Instead, you must reframe the situation in a way that enables them to perceive it in a different light.

They might then choose to change of their own accord.

–x–

Some years ago, I was part of a team that was asked to improve data literacy across an organisation.

Now, what is the first thing that comes to mind when you hear the term “data literacy“?

Chances are you think of it as a skill acquired through training. This is a natural reaction. We are conditioned to think of literacy as skill a acquired through programmed learning which, most often, happens in the context of a class or training program.

In the introduction to his classic Lectures on Physics, Richard Feynman (quoting Edward Gibbons) noted that, “the power of instruction is seldom of much efficacy except in those happy dispositions where it is almost superfluous.” This being so, wouldn’t it be better to use indirect approaches to learning? An example of such an approach is to “sneak” learning opportunities into day-to-day work rather than requiring people to “learn” in the artificial environment of a classroom. This would present employees multiple, even daily, opportunities to learn thus increasing their choices about how and when they learn.

We thought it worth a try.

–x–

It was clear to us that data literacy did not mean the same thing for a frontline employee as it did for a senior manager. Our first action, therefore, was to talk to the people across the organization who we knew worked with data in some way. We asked them questions on what data they used and how they used it. Most importantly we asked them to tell us about the one issue that “kept them up at night” and that they wished they had data about.

Unsurprisingly, the answers differed widely, depending on roles and positions in the organizational hierarchy. Managers wanted reports on performance, year on year trends etc. Frontline staff wanted feedback from customers and suggestions for improvement. There was a wide appreciation of what data could do for them, but an equally wide frustration that the data that was collected through surveys and systems tended to disappear into a data “black hole” never to be seen again or worse, presented in a way that was uninformative.

To tackle the issue, we took the first steps towards making data available to staff in ways that they could use. We did this by integrating customers, sales and demographic data along with survey data that collected feedback from customers and presenting it back to different user groups in ways that they could use immediately (what did customers think of the event? What worked? What didn’t?). We paid particular attention to addressing – if only partially – their responses to the “what keeps you up at night?” question.

A couple of years down the line, we could point to a clear uplift in data usage for decision making across the organization.

–x–

One of the phrases that keeps coming up in change management discussions is the need to “create an environment in which people can change.”

But what exactly does that mean?

How does a middle manager (with a fancy title that overstates the actual authority of the role) create an environment that facilitates change, within a larger system that is hostile to it? Indeed, the biggest obstacles to change are often senior managers who fear loss of control and therefore insist on tightly scripted change management approaches that limit the autonomy of frontline managers to improvise as needed.

Highly paid consultants and high-ranking executives are precisely the wrong people to be micro- scripting changes. If it is the frontline that needs to change, then that is where the story should be scripted.

People’s innate desire for a better working environment presents a fulcrum that is invariably overlooked. This is a shame because it is a point that can be leveraged to great advantage. To find the fulcrum, though, requires change agents to see what is happening on the ground instead of looking for things that confirm preconceived notions of management or fit prescriptions of methodologies.

Once one sees what is going on and acts upon it in a principled way, change comes for free.

–x–

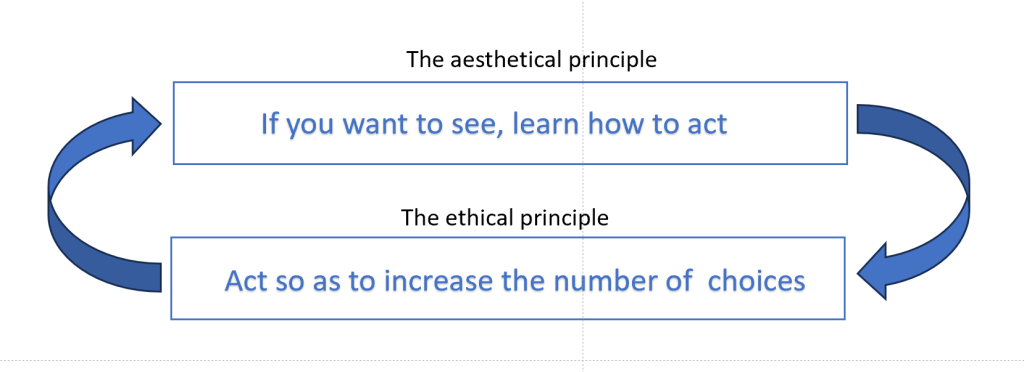

In his wonderful collection of essays on cognition, Heinz von Foerster, articulates two principles that ought to be the bedrock of all change efforts:

The Aesthetical Principle: If you want to see, learn how to act.

The Ethical Principle: Act always to increase choices.

The two are recursively connected as shown in Figure 1: to see, you must act appropriately; if you act appropriately, you will create new choices; the new choices (when enacted) will change the situation, and so you must see again…and so on. Recursively.

This paradigm puts a new spin on the tired old cliche about change being the only constant. Yes, change is unending, the world is Heraclitean, not Parmenidean. Yet, we have more power to shape it than we think, regardless of where we may be in the hierarchy. I first came across these principles over a decade ago and have used them as a guiding light in many of the change initiatives I have been involved in since.

Gregory Bateson once noted that, “what we lack is a theory of action in which the actor is part of the system.” Having used these principles for over a decade, I believe that an approach based on them could be a first step towards such a theory.

–x–

Von Foerster’s ethical and aesthetical imperatives are inspired by the philosophy of Ludwig Wittgenstein, who made the following statements in his best known book, Tractatus Logico-Philosophicus:

- It is clear that ethics cannot be articulated: meaning that ethical behaviour manifests itself through actions not words. Increasing choices for everyone affected by a change is an example of ethical action.

- Ethics and aesthetics are one: meaning that ethical actions have aesthetical outcomes– i.e., outcomes that are beautiful (in the sense of being harmonious with the wider system – an organization in this case)

By their very nature ethical and aesthetical principles do not prescribe specific actions. Instead, they exhort us to truly see what is happening and then devise actions – preferably non-intrusive and oblique – that might enable beneficial outcomes to occur. Doing this requires a sense of place and belonging, one that David Snowden captures beautifully in this essay.

–x–

Perhaps you remain unconvinced by my words. I can understand that. Unfortunately, I cannot offer scientific evidence of the efficacy of this approach; there is no proof and I do not think there will ever be. The approach works by altering conditions, not people, and conditions are hard to pin down. This being so, the effects of the changes are often wrongly attributed causes such as “inspiring leadership” or “highly motivated teams” etc. These are but labels that are used to dodge the question of what makes leadership inspiring or motivating.

A final word.

What is it that makes a work of art captivating? Critics may come up with various explanations, but ultimately its beauty is impossible to describe in words. For much the same reason, art teachers can teach techniques used by great artists, but they cannot teach their students how to create masterpieces.

You cannot paint a masterpiece by numbers but you can work towards creating one, a painting at a time.

The same is true of ethical and aesthetical approaches to change.

–x–x–

Note: The approach described here underpins Emergent Design, an evolutionary approach to sociotechnical change. See this piece for an introduction to Emergent Design and this book for details on how to apply it in the context of building modern data capabilities in organisations (use the code AFL03 for a 20% discount off the list price)

What is Emergent Design?

Last week I had the opportunity to talk to a data science team about the problems associated with building a modern data capability in a corporate environment. The organisation’s Head of Data was particularly keen to hear about how the Emergent Design approach proposed in my recent book might help with some of the challenges they are facing. To help me frame my talk, he sent me a bunch of questions, which included the following two:

- Can you walk us through the basic steps of emergent design in data science?

- How does emergent design work with other data science methodologies, such as CRISP-DM or Agile?

On reading these questions, I realized I had a challenge on my hands: Emergent Design is about cultivating a mindset or disposition with which to approach problematic situations, rather than implementing a canned strategic framework or design methodology. Comparing it to other methodologies would be a category error – like comparing pizza to philosophy. (Incidentally, I think this is the same error that some (many?) Agile practitioners make when they mistake the rituals of Agile for the mindset required to do it right…but that’s another story.)

The thing is this: the initial task of strategy or design work is about a) understanding what ought to be done, considering the context of the organisation and b) constructing an appropriate approach to doing it. In other words, it is about framing the (strategic or any other) problem and then figuring out what to do about it, all the while keeping in mind the specific context of the organization. Emergent Design is a principled approach to doing this.

–x–

So, what is Emergent Design?

A good place to start is with the following passage from the PhD thesis of David Cavallo, the man who coined the term and developed the ideas behind it:

“The central thrust of this thesis is the presentation of a new strategy for educational intervention. The approach I describe here resembles that of architecture, not only in the diversity of the sources of knowledge it uses but in another aspect as well – the practice of letting the design emerge from an interaction with the client. The outcome is determined by the interplay between understanding the goals of the client; the expertise, experience, and aesthetics of the architect; and the environmental and situational constraints of the design space. Unlike architecture where the outcome is complete with the artifact, the design of [such initiatives] interventions is strengthened when it is applied iteratively. The basis for action and outcome is through the construction of understanding by the participants. I call this process Emergent Design.”

Applied to the context of building a data capability, Emergent Design involves:

- Having conversations across the organization to understand the kinds of problems people are grappling with. The problems may or may not involve data. The ones that do not involve data give you valuable information about the things people worry about. In general the conversations will cover a lot of territory and that is OK – the aim is to get a general feel for the issues that matter.

- Following the above, framing a handful – two or three – concrete problems that you can solve using skills and resources available on hand. These proof-of-concept projects will help you gain allies and supporters for your broader strategic efforts.

While doing the above, you will notice that people across the organization have wildly different perspectives on what needs to be done and how. Moreover, they will have varying perspectives on what technologies should be used. As a technology strategist, your key challenge is around how to reconcile and synthesise these varied viewpoints. This is what makes the problem of strategy a wicked problem. For more on the wicked elements of building data capabilities, check out the first chapter of my book which is available for free here.

Put simply then, Emergent Design is about:

- Determining a direction rather than constructing a roadmap

- Letting the immediate next steps be determined by what adds the greatest value (for your stakeholders)

With that said for the “what,” I will now say a few words about the “how.”

–x–

In the book we set out eight guidelines or principles for practicing Emergent Design. I describe them briefly below:

Be a midwife rather than an expert: In practical terms, this involves having conversations with key people in business units across the organisation, with the aim of understanding their pressing problems and how data might help in solving them (we elaborate on this at length in Chapter 4 of the book). The objective at this early stage is to find out what kind of data science function your organisation needs. Rather than deep expertise in data science, this requires an ability to listen to experts in other fields, and translate what they say into meaningful problems that can potentially be solved by data science. In other words, this requires the strategist to be a midwife rather than an expert.

Use conversations to gain commitment: In their ground- breaking book on computers and cognition, Winograd and Flores observed that “organisations are networks of commitments.” between people who comprise the organisation. It is through conversations that commitments between different groups of stakeholders are established and subsequently acted on. In Chapter 3 of the book, we offer some tips on how to have such conversations. The basic idea in the above is to encourage people to say what they really think, rather than what they think you want them to say. It is crucial to keep in mind that people may be unwilling to engage with you because they do not understand the implications of the proposed changes and are fearful of what it might mean for them.

Understand and address concerns of stakeholders who are wary of the proposed change: In our book, The Heretic’s Guide to Management, Paul Culmsee and I offer advice on how to do this in specific contexts using what we call “management teddy bears”. These involve offering reassurance, advice, or opportunities that reduce anxiety, very much akin to how one might calm anxious children by offering them teddy bears or security blankets. Here are a few examples of such teddy bears:

- A common fear that people have is that the new capability might reduce the importance of their current roles. A good way to handle this is to offer these people a clear and workable path to be a part of the change. For example, one could demonstrate how the new capability (a) enriches their current role or (b) offers opportunities to learn new skills or (c) enhances their effectiveness. We could call this the “co- opt teddy bear”. In Chapter 7 of our data science strategy book, we offer concrete ways to involve the business in data science projects in ways that makes the projects theirs.

- It may also happen that some stakeholder groups are opposed to the change for political reasons. In this case, one can buy time by playing down the significance of the new capability. For example, one could frame the initiative as a “pilot” project run by the current data and reporting function. We could call this the “pilot teddy bear.” See the case study towards the end of Chapter 3 of the data science strategy book for an example of a situation in which I used this teddy bear.

Frame the current situation as an enabling constraint: In strategy development, it is usual to think of the current situation in negative terms, a situation that is undesirable and one that must be changed as soon as practicable. However, one can flip this around and look at the situation from the perspective of finding specific things that you can change with minimal political or financial cost. In other words, you reframe the current situation as an enabling constraint (see this paper by Kauffman and Garre for more on this). The current situation is well defined, but there are an infinite number of possible next steps. Although the actual next step cannot be predicted, one can make a good enough next step by thinking about the current situation creatively in order to explore what Kauffman calls the adjacent possible – the possible future states that are within reach, given the current state of the organisation (see this paper by Kauffman). You may have to test a few of the adjacent possible states before you figure out which one is the best. This is best done via small, safe- to- fail proof of concept projects (discussed at length in Chapter 4 of our data science strategy book).

Consider long- term and hidden consequences: It is a fact of life that when choosing between different approaches, people will tend to focus on short- term gains rather than long- term consequences. Indeed, one does not have to look far to see examples that have global implications (e.g., the financial crisis of 2008 and climate change). Valuing long- term results is difficult because the distant future is less salient than the present or the immediate future. A good way to look beyond immediate concerns (such as cost) is to use the “solution after next principle” proposed by Gerald Nadler and Shozo Hibino in their book entitled Breakthrough Thinking. The basic idea behind the principle is to get people to focus on the goals that lie beyond the immediate goal. The process of thinking about and articulating longer term goals can often provide insights into potential problems with the current goals and/ or how they are being achieved. We discuss this principle and another approach to surfacing hidden issues in Chapters 3 and 4 of the data science strategy book.

Create an environment that encourages learning: Emergent Design is a process of experimentation and learning. However, all learning other than that of the most trivial kind involves the possibility of error. So, for it to work, one needs to create an environment of psychological safety – i.e., an environment in which employees feel safe to take risks by trialling new ideas and processes, with the possibility of failure. A key feature of learning organisations is that when things go wrong, the focus is not on fixing blame but on fixing the underlying issue and, more importantly, learning from it so that one reduces the chances of recurrence. It is interesting to note that this focus on the system rather than the individual is also a feature of high reliability organisations such as emergency response agencies.

Beware of platitudinous goals: Strategies are often littered with buzzwords and platitudes – cliched phrases that sound impressive but are devoid of meaning. For example, two in- vogue platitudes at the time our book was being written are “digital transformation” and “artificial intelligence.” They are platitudes because they tell you little about what exactly they mean in the specific context of the organisation.

The best way to deconstruct a platitude is via an oblique approach that is best illustrated through an example. Say someone tells you that they want to implement “artificial intelligence” (or achieve a “digital transformation” or any other platitude!) in their organisation. How would you go about finding out what exactly they want? Asking them what they mean by “artificial intelligence” is not likely to be helpful because the answer you will get is likely to be couched in generalities such as data- driven decision making or automation, phrases that are world- class platitudes in their own right! Instead, it is better to ask them how artificial intelligence would make a difference to the organisation. This can help you steer the discussion towards a concrete business problem, thereby bringing the conversation down from platitude- land to concrete, measurable outcomes.

Act so as to increase your choices: This is perhaps the most important point in this list because it encapsulates all the other points. We have adapted it from Heinz von Foerster’s ethical imperative which states that one should always act so as to increase the number of choices in the future (see this lecture by von Foerster). Keeping this in mind as you design your data science strategy will help you avoid technology or intellectual lock in. As an example of the former, when you choose a product from a particular vendor, they will want you to use their offerings for the other components of your data stack. Designing each layer of the stack in a way that can work with other technologies ensures interoperability, an important feature of a robust data technology stack (discussed in detail in Chapter 6 of the strategy book). As an example of the latter, when hiring data scientists, hire not just for what they know now but also for evidence of their curiosity and proclivity to learn new things – a point we elaborate on in Chapter 5 of the data strategy book.

You might be wondering why von Foerster called this the “ethical imperative.” An important aspect of this principle is that your actions should not constrain the choices of others. Since the predictions of your analytical models could well affect the choice of others (e.g. whether or not they are approved for a loan or screened out for a job), you should also cast an ethical eye on your work. We discuss ethics and privacy at length in Chapter 8 of the data strategy book.

–x–

These principles do not tell you what to do in specific situations. Rather, they are about cultivating a disposition that looks at technology through the multiple, and often conflicting, perspectives of those whose work is affected by it. A technical capability is never purely technical; within the context of an organisation it becomes a sociotechnical capability.

In closing: Emergent Design encourages practitioners to recognise that building and embedding a data capability (or any other technical capability such as program evaluation) is a process of organisational change that is best achieved in an evolutionary manner. This entails starting from where people are – in terms of capability, culture, technology, and governance – and enabling them to undertake a collective journey in an agreed direction.

…and, yes, all the while keeping in mind that it is the journey that matters, not the destination.

Preface to “Data Science and Analytics Strategy: An Emergent Design Approach”

As we were close to completing the book (sample available here), Harvard Business Review published an article entitled, Is Data Scientist Still the Sexiest Job of the 21st Century? The article revisits a claim made a decade ago, in a similarly titled piece about the attractiveness of the profession.2 In the recent article, the authors note that although data science is now a well- established function in the business world, setting up the function presents a number of traps for the unwary. In particular, they identify the following challenges:

- The diverse skills required to do data science in an organisational setting.

- A rapidly evolving technology landscape.

- Issues around managing data science projects; in particular, productionising data science models – i.e., deploying them for ongoing use in business decision- making.

- Putting in place the organisational structures/ processes and cultivating individual dispositions to ensure that data science is done in an ethical manner.

On reviewing our nearly completed manuscript, we saw that we have spoken about each of these issues, in nearly the same order that they are discussed in the article (see the titles of Chapters 5– 8). It appears that the issues we identified as pivotal are indeed the ones that organisations face when setting up a new data science function. That said, the approach we advocate to tackle these challenges is somewhat unusual and therefore merits a prefatory explanation.

The approach proposed in this book arose from the professional experiences of two very different individuals, whose thoughts on how to “do data” in organisational settings converged via innumerable conversations over the last five years. Prior to working on this book, we collaborated on developing and teaching an introductory postgraduate data science course to diverse audiences ranging from data analysts and IT professionals to sociologists and journalists. At the same time, we led very different professional lives, working on assorted data- related roles in multinational enterprises, government, higher education, not- for- profit organisations and start- ups. The main lesson we learned from our teaching and professional experiences is that, when building data capabilities, it is necessary to first understand where people are – in terms of current knowledge, past experience, and future plans – and grow the capability from there.

To summarise our approach in a line: data capabilities should be grown, not grafted.

This is the central theme of Emergent Design, which we introduce in Chapter 1 and elaborate in Chapter 3. The rest of the book is about building a data science capability using this approach.

Naturally, we were keen to sense- check our thinking with others. To this end, we interviewed a number of well- established data leaders and practitioners from diverse domains, asking them about their approach to setting up and maintaining data science capabilities. You will find their quotes scattered liberally across the second half of this book. When speaking with these individuals, we found that most of them tend to favour an evolutionary approach not unlike the one we advocate in the book. To be sure, organisations need formal structures and processes in place to ensure consistency, but many of the data leaders we spoke with emphasised the need to grow these in a gradual manner, taking into account the specific context of their organisations.

It seems to us that many who are successful in building data science and analytics capabilities tacitly use an emergent design approach, or at least some elements of it. Yet, there is very little discussion about this approach in the professional and academic literature. This book is our attempt at bridging this gap.

Although primarily written for business managers and senior data professionals who are interested in establishing modern data capabilities in their organisations, we are also speaking to a wider audience ranging from data science and business students to data professionals who would like to step into management roles. Last but not least, we hope the book will appeal to curious business professionals who would like to develop a solid understanding of the various components of a modern data capability. That said, regardless of their backgrounds and interests, we hope readers will find this book useful … and dare we say, an enjoyable read.

Note:

You can buy the book from the Routledge website. If you do, please use the code AFL03 for a 20% discount (code valid until end 2023). Note that the discount has already been applied in some countries.