Archive for April 2009

IT does matter

Does IT matter? This question has been debated endlessly since Nicholas Carr published his influential article over five years ago. Carr argues that the ubiquity of information technology has diminished its strategic importance: in other words, since every organisation uses IT in much the same way, it no longer confers a competitive advantage. Business executives and decision-makers who are familiar with Carr’s work will find a ready-made rationale for restructuring their IT departments, or even doing away with them altogether. If IT isn’t a strategic asset, why bother having an in-house IT department? Salaries, servers and software add up to a sizeable stack of dollars. To an executive watching costs, particularly in these troubled times, the argument to outsource IT is compelling. Compelling, maybe, but misguided. In this post I explain why I think so.

About a year ago, I wrote a piece entitled Whither Corporate IT, in which I reflected on what the commoditisation of IT meant for those who earn their daily dollar in corporate IT. In that article, I presumed that commoditisation was inevitable, leaving little or no room for in-house IT professionals as we know them. I say “presumed” because I had taken the inevitability of commoditisation as a given, basically because Carr said so. Now, a year later, I’d like to take a closer look at that presumption, because it is far from obvious that everything the IT crowd does (or should be doing!) can be commoditised as Carr suggests.

The commoditisation of IT has a long history. The evolution of the computer from the 27-tonne ENIAC to the featherweight laptop is but one manifestation of this: the former was an expensive, custom-built machine that needed an in-house supporting crew of several technicians and programmers, whereas the latter is a product that can be purchased off the shelf in a consumer electronics store. More recently, IT services such as those provided by people (e.g. service desk) and software (e.g. email) have also been packaged and sold. In his 2003 article, and in his book published a little over a year ago, Carr extrapolates this trend towards “productising” technology to an extreme, where IT becomes a utility like electricity or water.

The IT-as-utility argument focuses on technology and packaged services. It largely ignores the creative ways in which people adapt and use technology to solve business problems. It also ignores the fact that software is easy to adapt and change. As Scott Rosenberg notes in his book, Dreaming in Code:

“…of all the capital goods in which businesses invest large sums, software is uniquely mutable. The gigantic software packages for CRM and ERP that occupy the lives of the CTOs and CIOs of big corporations may be cumbersome and expensive. But they are still made of “thought-stuff”. And so every piece of software that gets used gets changed as people decide they want to adapt it for some new purpose…”

Some might argue that packaged enterprise applications are rarely, if ever, modified by in-house IT staff. True. But it is also undeniable that no two deployments of an enterprise application are ever identical; each has its own characteristics and quirks. When viewed in the context of an organisation’s IT ecosystem – which includes the application mix, data, interfaces etc – this uniqueness is even more distinct. I would go so far as to suggest that it often reflects the unique characteristics and quirks of the organisation itself.

Perhaps an example is in order here:

Consider a company that uses a CRM application. The implementation and hosting of the application can be outsourced, as it often is. Even better, organisations can often purchase such applications as a service (this CRM vendor is a good example). The latter option is, in fact, a form of IT as a utility: the purchasing organisation is charged a usage-based fee for the service, in much the same way as one is charged (by the meter) for water or electricity. Let’s assume that our company has chosen this option to save costs. Things seem to be working out nicely: costs are down (primarily because of the reduction in IT headcount); the application works as advertised; there’s little downtime; and routine service requests are handled in an exemplary manner. All’s well with the world until…inevitably…someone wants to do something that’s not covered by the service agreement: say, a product manager wants to explore the CRM data for (as yet unknown) relationships between customer attributes and sales (aka data mining). The patterns that emerge could give the company a competitive advantage in the market. There is a problem, though. The product manager isn’t a database expert, and there’s no in-house technical expert to help her with the analysis and programming. To make progress she has to get external help. However, she’s uncomfortable with the idea of outsourcing this potentially important and sensitive work. Even with signed confidentiality agreements in place, would you outsource work that could give your organisation an edge in the market? Maybe you would if you had to, but I suspect you wouldn’t be entirely comfortable doing so.

My point: IT, if done right, has the potential to be much more than just a routine service. The example above illustrates how IT can give an organisation an edge over its competitors.

The view of IT as service provider is a limited one. Unfortunately, that’s the view that many business leaders have. The IT-as-utility crowd know and exploit this. The trade media, with their continual focus on new technology, only help perpetuate the myth. In order to exploit existing technologies in new ways to solve business problems – and to even see the possibilities of doing so – companies need to have people who grok technology and the business. Who better to do this than an IT worker? Typically, these folks have good analytical abilities and the smarts to pick up new skills quickly, two traits that make them ideal internal consultants for a business. OK, some of them may have to work on their communication skills – going by the stereotype of the communication-challenged IT guy – but that’s far from a show-stopper.

Of course this needs to be a two-way street; a collaboration between business and IT.

In most organisations there is rarely any true collaboration between IT and the business. The fault, I think, lies with those on both sides of the fence. Folks in IT are well placed to offer advice on how applications and data can be used in new ways, but often lack a deep enough knowledge of the business to do so in any meaningful way. Those who do have this knowledge are generally loath to venture forth and sell their ideas to the business – primarily because they’re not likely to be taken seriously. On the other hand, those on the business side do not appreciate how technology can be adapted to solve specific business problems. What’s needed to bridge this gap is an ongoing dialogue between the two sides at all levels in the organisation. This is possible only with executive support, which won’t be forthcoming until business leaders appreciate the advantages that internal IT groups can offer.

Once in place, IT-business collaboration can evolve further. In an opinion piece published in CIO magazine, Andrew Rowsell-Jones describes four roles that IT can assume in an enterprise. These are:

- Transactional Organisation: Here IT is an “order taker”. The business asks for what it needs and IT delivers. In such a role, IT is purely a technology provider; innovations as such focus only on improving operational efficiency. This is basically the outdated IT-as-service (and not much else) view.

- Business partner: Here IT engages with the business; it understands business needs and provides a solution appropriate to business requirements.

- Consultant: This takes IT engagement to the next level: IT understands business issues and technology trends, and feels free to suggest solutions that will help drive business success, much like an external business/technology consultant.

- Strategic: This is the semi-mythical place all IT departments want to be: In such organisations IT is viewed as an asset that plays an important role in developing, implementing and executing the organisation’s strategy.

[Note that the second and third roles (business partner and consultant) are qualitatively the same: a business partner who understands the business and is viewed as an adviser by the business is really a consultant.]

These roles can be seen as describing the evolution of an IT department as it moves from an operational to a strategic function.

Moving up this value chain does not mean latching onto the latest fad in an uncritical manner. Yes, one has to keep up with and evaluate new offerings and ideas. But that apart, shiny new technologies are best left alone until proven (preferably by others!). Even when proven, they should be used only when it makes strategic sense to do so. Business strategies are seldom realised by shoehorning organisational processes into alleged “best practices” or new technologies. Instead, organisations would be better served by a change in how IT is viewed: from service provider to business partner or, even better, consultant and advisor.

IT is more about business problem solving and innovation than about technology. Corporate IT folks must realise, believe and live this, because only then will they be able to begin to convince their business counterparts that IT really does matter.

Capturing project knowledge using issue maps

Here’s a question that’s been on my mind for a while: Why do organisations find it difficult to capture and transmit knowledge created in projects?

I’ll attempt an answer of sorts in this post and point to a solution of sorts too – taking a bit of a detour along the way, as I’m often guilty of doing…

Let us first consider how knowledge is generated on projects. In project environments, knowledge is typically created in response to a challenge faced. The challenge may be the project objective itself, in which case the knowledge created is embedded in the product or service design. On the other hand, it could be a small part of the project: say, a limitation of the technology used, in which case the knowledge might correspond to a workaround discovered by a project team member. In either case, the existence of an issue or challenge is a precondition to the creation of knowledge. The project team (or a subset of it) decides how the issue should be tackled. They do so incrementally, through exploration, trial and error, and discussion, creating knowledge in the bargain.

In a nutshell: knowledge is created as project members go through a process of discovery. This process may involve the entire team (as in the first example) or a very small subset thereof (as in the second example, where the programmer may be working alone). In both cases, though, the aim is to understand the problem, explore potential solutions and find the “best” one, thus creating new knowledge.

Now, from the perspective of project and organisational learning, project documentation must capture the solution and the process by which it was developed. Most documentation tends to focus on the former, neglecting the latter. This is akin to presenting a solution to a high school mathematics problem without showing the intervening steps. My high school maths teacher would’ve awarded me a zero for such an effort regardless of whether or not my answer was right. And with good reason too: the steps leading up to a solution are more important than the solution itself. Why? Because the steps illustrate the thought processes and reasoning behind the solution. So it is with project documentation. Ideally, documentation should provide the background, constraints, viewpoints, arguments and counter-arguments that go into the creation of project knowledge.

Unfortunately, it seldom does.

Why is this so? An answer can be found in a farsighted paper, entitled Designing Organizational Memory: Preserving Intellectual Assets in a Knowledge Economy, published by Jeff Conklin in 1997. In the paper, Conklin draws a distinction between formal and informal knowledge, terms that correspond to the end result and the process discussed above. To quote from his paper, formal knowledge is the “…stuff of books, manuals, documents and training courses…the primary work product of the knowledge worker…” Conklin notes that, “Organisations routinely capture formal knowledge; indeed, they rely on it – without much success – as their organizational memory.” On the other hand, informal knowledge is, “the knowledge that is created and used in the process of creating formal results…It includes ideas, facts, assumptions, meanings, questions, decisions, guesses, stories and points of view. It is as important in the work of the knowledge worker as formal knowledge is, but it is more ephemeral and transitory…” As a consequence, informal knowledge is elusive; it rarely makes it into documents.

Dr. Conklin lists two reasons why organisations (including temporary organisations such as projects) fail to capture informal knowledge. These are:

- Business culture values results over process. In his words, “One reason for the widespread failure to capture informal knowledge is that Western culture has come to value results – the output of work process – far above the process itself, and to emphasise things over relationships.”

- The tools of knowledge workers do not support the process of knowledge work – that is, most tools focus on capturing formal knowledge (the end result of knowledge work) rather than informal knowledge (how that end result was achieved – the “steps” in the solution, so to speak).

The key to creating useful documentation thus lies in capturing informal knowledge. In order to do that, this elusive form of knowledge must first be made explicit, i.e. expressible in words and pictures. Here’s where the notion of shared understanding, discussed in my previous post, comes into play.

To quote again from Conklin’s paper, “One element of creating shared understanding is making informal knowledge explicit. This means surfacing key ideas, facts, assumptions, meanings, questions, decisions, guesses, stories, and points of view. It means capturing and organizing this informal knowledge so that everyone has access to it. It means changing the process of knowledge work so that the focus is on creating and managing a shared display of the group’s informal thinking and learning. The shared display is the transparent vehicle for making informal knowledge explicit.”

The notion of a shared display is central to the technique of dialogue mapping, a group facilitation technique that can help a diverse group achieve a shared understanding of a problem. Dialogue mapping uses a visual notation called IBIS (short for Issue Based Information System) to capture the issues, ideas and arguments that come up in a meeting (see my previous post for a quick introduction to dialogue mapping and a crash course in IBIS). As far as knowledge capture is concerned, I see two distinct uses of IBIS. They are:

- As Conklin suggests, IBIS maps can be used to surface and capture informal knowledge in real-time, as it is being generated in a discussion between two or more people.

- It can also be used in situations where one person researches and finds a solution to a challenge. In this case the person could use IBIS to capture background, approaches tried, the pros and cons of each – i.e. the process by which the solution was arrived at.

This, together with the formal stuff – which most project organisations capture more than adequately – should result in documents that provide a more complete view of the knowledge generated in projects.

Now, in a previous post (linked above) I discussed an example of how dialogue mapping was used on a real-life project challenge: how best to implement near real-time updates to a finance data mart. The final issue map, which was constructed using a mapping tool called Compendium, is reproduced below:

Example Issue Map

The map captures not only the decisions made, but also the options discussed and the arguments for and against each of the options. As such, it captures the decision along with the context, process and rationale behind it. In other words, it captures formal and informal knowledge pertaining to the decision. In this simple case, IBIS succeeded in making informal knowledge explicit. Project teams down the line can understand why the decision was made, from both a technical and business perspective.

It is worth noting that Compendium maps can reference external documents via Reference Nodes, which, though less important for argumentation, are extremely useful for documentation. Here is an illustration of how references to external documents can be inserted in an IBIS map through a reference node:

Links to external docs

As illustrated, this feature can be used to link out to a range of external documents – clicking on the reference node in a map (not the image above!) opens up the external document. Exploiting this, a project document can operate at two levels: the first being an IBIS map that depicts the relationships between issues, ideas and arguments, and the second being supporting documents that provide details on specific nodes or relationships. Much of the informal knowledge pertaining to the issue resides in the first level – i.e. in the relationships between nodes – and this knowledge is made explicit in the map.

To conclude, it is perhaps worth summarising the main points of this post. Projects generate new knowledge through a process in which issues and ideas are proposed, explored and trialled. Typically, most project documentation captures the outcome (formal knowledge), neglecting much of the context, background and process of discovery (informal knowledge). IBIS maps offer the possibility of capturing both aspects of knowledge, resulting in a greatly improved project (and hence organisational) memory.

Issues, Ideas and Arguments: A communication-centric approach to tackling project complexity

I’ve written a number of posts on complexity in projects, covering topics ranging from conceptual issues to models of project complexity. Despite all that verbiage, I’ve never addressed the key issue of how complexity should be handled. Methodologists claim, with some justification, that complexity can be tamed by adequate planning together with appropriate controlling and monitoring as the project progresses. Yet, personal experience – and the accounts of many others – suggests that the beast remains untamed. A few weeks ago, I read this brilliant series of articles by Paul Culmsee, where he discusses a technique called Dialogue Mapping which, among other things, may prove to be a dragon-slayer. In this post I present an overview of the technique and illustrate its utility in real-life project situations.

First a brief history: dialogue mapping has its roots in wicked problems – problems that are hard to solve, or even define, because they satisfy one or more of these criteria. (Note that there is a relationship between problem wickedness and project complexity: projects that set out to address or solve wicked problems are generally complex but the converse is not necessarily true – see this post for more). Over three decades ago, Horst Rittel – the man who coined the term “wicked problem” – and his colleague Werner Kunz developed a technique called Issue Based Information System (IBIS) to aid in the understanding of such problems. IBIS is based on the premise that wicked problems – or any contentious issues – can be understood by discussing them in terms of three essential elements: issues (or questions), ideas (or answers) and arguments (for or against ideas). IBIS was subsequently refined over the years by various research groups and independent investigators. Jeff Conklin, the inventor of dialogue mapping, was one of the main contributors to this effort.

Now, the beauty of IBIS is that it is very easy to learn. Basically it has only the three elements mentioned earlier – issues, ideas and arguments – and these can be connected only in ways specified by the IBIS grammar. The elements and syntax of the language can be illustrated in half a page – as I shall do in a minute. Before doing so, I should mention that there is an excellent, free software tool – Compendium – that supports the IBIS notation. I use it in the discussion and demo below. I recommend that you download and install Compendium before proceeding any further.

Go on, I’ll wait for you…

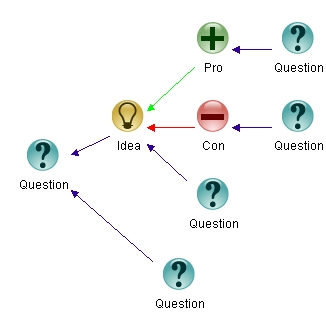

OK, let’s begin. IBIS has three elements which are illustrated in the Compendium map below:

Figure 1: IBIS Elements

The elements are:

Question: an issue that’s being discussed or analysed. Note that the term “question” is synonymous with “issue”.

Idea: a response to a question. An idea responds to a question in the sense that it offers a potential resolution or clarification of the question.

Argument: an argument in favour of or against an idea (a pro or a con).

The arrows show links or relationships between elements.

That’s it as far as elements of IBIS are concerned.

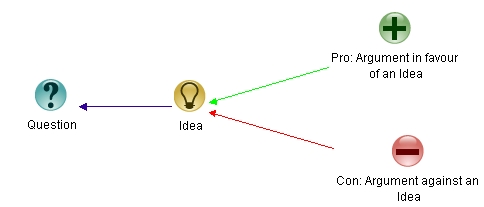

The IBIS grammar specifies the legal ways in which elements can be linked. The rules are nicely summarised in the following diagram:

In a nutshell, the rules are:

- Any element (question, idea or argument) can be questioned.

- Ideas respond to questions.

- Arguments make the case for and against ideas. Note that questions cannot be argued!

Simple, isn’t it? Essentially that’s all there is to IBIS.
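The three linking rules are small enough to express as a few type checks. The following is a minimal Python sketch, not Compendium's data model or any published IBIS implementation: the `Kind` and `Node` types and the `is_legal_link` function are hypothetical names, and the code encodes only the three rules as stated in this post.

```python
from dataclasses import dataclass
from enum import Enum


class Kind(Enum):
    QUESTION = "question"
    IDEA = "idea"
    PRO = "pro"  # argument in favour of an idea
    CON = "con"  # argument against an idea


@dataclass(frozen=True)
class Node:
    kind: Kind
    text: str


def is_legal_link(child: Node, parent: Node) -> bool:
    """Return True if `child` may be linked to `parent` under the three rules."""
    if child.kind is Kind.QUESTION:
        # Rule 1: any element (question, idea or argument) can be questioned.
        return True
    if child.kind is Kind.IDEA:
        # Rule 2: ideas respond to questions.
        return parent.kind is Kind.QUESTION
    # Rule 3: arguments (pros and cons) apply to ideas only; questions cannot be argued.
    return parent.kind is Kind.IDEA


# Illustrative nodes; the pro is a made-up example, not taken from the real map.
issue = Node(Kind.QUESTION, "How should we update our data mart during business hours?")
etl = Node(Kind.IDEA, "Run the existing ETL procedures more frequently")
pro = Node(Kind.PRO, "Builds on procedures we already have")
print(is_legal_link(etl, issue))  # True
print(is_legal_link(pro, etl))    # True
print(is_legal_link(pro, issue))  # False
```

The point of the sketch is how little there is to check: the kind of the child node alone decides which parent kinds are allowed.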

So what’s IBIS got to do with dialogue mapping? Well, dialogue mapping is the facilitation of a group discussion using a shared display: a display that everyone participating in the discussion can see and add to. Typically the facilitator drives (i.e. modifies the display), seeking input from all participants and using the IBIS notation to capture the issues, ideas and arguments that come up. This synchronous, or real-time, application of IBIS is described in Conklin’s book, Dialogue Mapping: Building Shared Understanding of Wicked Problems (an absolute must-read if you work on complex projects with diverse stakeholders). For completeness, it is worth pointing out that IBIS can also be used asynchronously, for example in dissecting arguments presented in papers and articles. This application of IBIS, which is essentially dialogue mapping minus facilitation, is sometimes called issue mapping.

I now describe a simple but realistic application of dialogue mapping, adapted from a real-life case. For brevity, I won’t reproduce the entire dialogue. Instead I’ll describe how a dialogue map develops as a discussion progresses. The example is a simple illustration of how IBIS can be used to facilitate a shared understanding (and solution) of a problem.

All set? OK, let’s go…

The situation: Our finance data mart is updated overnight through a batch job that takes a few hours. This is good enough for most purposes. However, a small (but very vocal!) number of users need to be able to report on transactions that have occurred within the last hour or so – waiting until the next day, especially during month-end, is simply not an option. The dev team had to figure out the best way to do this.

The location: my office.

The players: two colleagues from IT, one from finance, myself.

The shared display: Compendium running on my computer, visible to all the players.

The discussion was launched with the issue stated up-front: How should we update our data mart during business hours? My colleagues in the dev team came up with several ideas to address the issue. After capturing the issue and responding ideas, the map looked like this:

Figure 3: Map - stage 1

In brief, the options were to:

- Use our messaging infrastructure to carry out the update.

- Write database triggers on transaction tables. These triggers would update the data mart tables directly or indirectly.

- Write custom T-SQL procedures (or an SSIS package) to carry out the update (the database is SQL Server 2005).

- Run the relevant (already existing) Extract, Transform, Load (ETL) procedures at more frequent intervals – possibly several times during the day.

As the discussion progressed, the developers raised arguments for and against the ideas. A little later the map looked like this:

Figure 4: Map - stage 2

Note that data latency refers to the time between a transaction occurring and its appearance in the data mart, and complexity is a rough measure of the effort (in time) required to implement an option. I won’t go through the arguments in detail, as they are largely self-explanatory.
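The latency argument for the run-the-ETL-more-often option can be made concrete with a little arithmetic. The sketch below assumes a batch job that starts every `interval`, picks up every transaction committed before it starts, and takes `run_time` to make those transactions visible in the data mart, with runs that never overlap. Under those assumptions, a transaction committed just after a run starts waits a full interval plus a run, so the worst case is `interval + run_time`. The function name and the durations in the usage line are hypothetical, not figures from the project.

```python
from datetime import timedelta


def batch_latency(interval: timedelta, run_time: timedelta) -> tuple[timedelta, timedelta, timedelta]:
    """Return (best, average, worst) data latency for a periodic ETL job.

    Assumes each run starts every `interval`, extracts everything committed
    before its start time, and needs `run_time` to load it into the data mart.
    """
    if run_time >= interval:
        raise ValueError("runs would overlap: run_time must be shorter than interval")
    best = run_time                    # committed just before a run starts
    average = interval / 2 + run_time  # commits spread evenly between run starts
    worst = interval + run_time        # committed just after a run starts
    return best, average, worst


# Hypothetical figures: an hourly run that takes ten minutes.
print(*batch_latency(timedelta(hours=1), timedelta(minutes=10)))
# prints: 0:10:00 0:40:00 1:10:00
```

By the same formula, a nightly job that takes a few hours has a worst case of more than a day, whereas hourly runs keep it at roughly an hour plus the run time.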

The business rep then asked how often the ETL could be run.

“The relevant portions can be run hourly, if you really want to,” replied our ETL queen.

“That’s good enough,” said the business rep.

…voilà, we had a solution!

The final map looked much like the previous one: the only additions were the business rep’s question, the developer’s response and a node marking the decision made:

Figure 5: Final Map

Note that a decision node is simply an idea that is accepted (by all parties) as a decision on the issue being discussed.
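The definition above (a decision is an idea that every party accepts) can be written as a one-line subset check. This Python sketch is illustrative only: `Idea`, `mark_decision` and the participant labels are hypothetical stand-ins for the two IT colleagues, the finance representative and the facilitator, and this is not how Compendium represents decision nodes; the check simply encodes the definition given here.

```python
from dataclasses import dataclass, field


@dataclass
class Idea:
    text: str
    accepted_by: set[str] = field(default_factory=set)  # participants who accept this idea
    is_decision: bool = False


def mark_decision(idea: Idea, participants: set[str]) -> bool:
    """Mark `idea` as the decision only if every participant has accepted it."""
    idea.is_decision = participants <= idea.accepted_by
    return idea.is_decision


# Hypothetical labels for the four people in the meeting.
participants = {"it_1", "it_2", "finance", "facilitator"}
hourly_etl = Idea("Run the relevant ETL procedures hourly")
hourly_etl.accepted_by |= {"it_1", "it_2", "finance"}
print(mark_decision(hourly_etl, participants))  # False: the facilitator has not agreed yet
hourly_etl.accepted_by.add("facilitator")
print(mark_decision(hourly_etl, participants))  # True
```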

Admittedly, my little example is nowhere near a complex project. However, before dismissing it outright, consider the following benefits afforded by the process of dialogue mapping:

- Everyone’s point of view was taken into account.

- The shared display served as a focal point of the discussion: the entire group contributed to the development of the map. Further, all points and arguments made were represented in the map.

- The display and discussion around it ensured a common (or shared) understanding of the problem.

- Once a shared understanding was achieved – between the business and IT in this case – the solution was almost obvious.

- The finished map serves as an intuitive summary of the discussion – any participant can go back to it and recall the essential structure of the discussion in a way that’s almost impossible through a written document. If you think that’s a tall claim, here’s a challenge: try reconstructing a meeting from the written minutes.

Enough said, I think.

But perhaps my simple example leaves you unconvinced. If so, I urge you to read Jeff Conklin’s reflections on an “industrial strength” case study of dialogue mapping. Despite my limited practical experience with the technique, I believe it is a useful way to address issues that arise on complex projects, particularly those involving stakeholders with diverse points of view. That’s not to say that it is a panacea for project complexity, but then, nothing is. From a purely pragmatic perspective, it may be viewed as an addition to a project manager’s tool-chest of communication techniques. For, as I’ve noted elsewhere, “A shared world-view – which includes a common understanding of tools, terminology, culture, politics etc. – is what enables effective communication within a (project) group.” Dialogue mapping provides a practical means to achieve such a shared understanding.