The elusive arch – reflections on navigation and wayfinding

About a decade ago, when GPS technologies were on the cusp of ubiquity, Nicholas Carr made the following observation in a post on his blog:

“Navigation is the most elemental of our skills — “Where am I?” was the first question a creature had to answer — and it’s the one that gives us our tightest connection to the world. The loss of navigational sense is also often the first sign of a mind in decay…If “Where am I?” is the first question a creature had to answer, that suggests something else about us, something very important: memory and navigational sense may, at their source, be one and the same. The first things an animal had to remember were locational: Where’s my home? Where’s that source of food? Where are those predators? So memory may have emerged to aid in navigation.”

The interesting thing, as he notes in the post, is that the connection between memory and navigation has a scientific basis:

“In a 2013 article in Nature Neuroscience, Edvard Moser and his colleague György Buzsáki provided extensive experimental evidence that “the neuronal mechanisms that evolved to define the spatial relationship among landmarks can also serve to embody associations among objects, events and other types of factual information.” Out of such associations we weave the memories of our lives. It may well be that the brain’s navigational sense — its ancient, intricate way of plotting and recording movement through space — is the evolutionary font of all memory.”

If this claim has even a smidgen of truth, it should make you think (very hard!) about the negative effects of following canned directions. Indeed, you’ve probably experienced some of these when your GPS – for whatever reason – decided to malfunction mid-trip.

We find our way through unfamiliar physical or mental terrain by “feeling our way” through it, figuring out a route as we proceed. This process of wayfinding is how we develop our own personal mental maps of the unfamiliar.

–x–

A couple of weeks ago, I visited a close friend in Tasmania whom I hadn’t seen for a while. We are both keen walkers, so he had arranged for us to do the Cape Queen Elizabeth Walk on Bruny Island. The spectacular scenery and cool, cloudy weather set the scene for a great day.

From the accounts of others, we knew that the highlight of the walk is the Mars Bluff Arch, a natural formation carved out of rock over eons by the pounding waves. We were keen to get to the arch, but the directions in those accounts were somewhat ambiguous. Witness the following reviews from Tripadvisor:

“…it is feasible to reach the Arch even at high tide, but you will get wet. There is only one rock outcropping blocking your way when it’s not low tide (do not try to climb over/on it – it’s too dangerous). Take off your shoes, crop your pants, and walk through the ocean – just beside the visible rocks it’s all sand bottom. I did this at mid-tide and the water came up to my knees at the deepest point. It’s only about a 20 foot long section to walk. Try to time it so you don’t get splashed by waves…”

and

“…We went on low tide so we could walk the beach route as it’s really pretty. The other way around is further on and is about 30 mins longer there is a sign giving you the option once you get close to both directions so don’t worry if you do go on high tide. The Arch was a little hard to find once youre on the beach as it quite the way around through rocks and another cove looks like a solid rock from a distance but once your almost on top of it you see the arch…”

and

“…The tide was against us and so we slogged up the track over Mars Bluff with stunning panoramic views to Cape Elizabeth on one side and out to the Fluted Cape on the other. Had we taken the beach access we would not have enjoyed and marvelled at such stunning views! As we descended to the bleached white sand of the dunes it was interesting to try to determine the type of creatures that had left such an of prints and tracks in the sand. Had we not previously known of the arch’s existence, it would have been hard to find- it’s a real hidden gem, a geometric work of art, tucked away beneath the bluff!”

Daniel had looked up the tide charts, so we knew it was likely we’d have to take the longer route. Nevertheless, when we came to the fork, we thought we’d get down to the beach and check out the low tide route just in case.

As it turned out, the tide was up to the rocks. Taking the beach route would have been foolhardy.

We decided to “slog up the Mars Bluff track”. The thing is, when we got to the cove on the far side, we couldn’t find the damn arch.

–x–

In a walk – especially one that’s done for recreation and fun – exploration is the whole point. Google Maps-style directions (“walk 2 km due east on the track, turn left at the junction…”) would destroy the fun of finding things out for oneself.

In contrast, software users don’t want to spend their time exploring routes through a product; they want the most direct path from where they are to where they want to go. Consequently, good software documentation is unambiguous. It spells out exactly what you need to do to get the product to work the way it should. Technical writers – good ones, at any rate – take great care to ensure that their instructions can be interpreted in only one way.

Surprise is anathema in software, but is welcome in a walk.

–x–

In his celebrated book, Finite and Infinite Games, James Carse wrote:

“To be prepared against surprise is to be trained. To be prepared for surprise is to be educated.”

Much of what passes for education these days is about avoiding surprise. By Carse’s definition, training to be a lawyer or data scientist is not education at all. Why? I should let Carse speak, for he says it far more eloquently than I ever could:

“Education discovers an increasing richness in the past, because it sees what is unfinished there. Training regards the past as finished and the future as to be finished. Education leads toward a continuing self-discovery; training leads toward a final self-definition. Training repeats a completed past in the future. Education continues an unfinished past into the future.”

Do you want to be defined by a label – lawyer or data scientist – or do you see yourself as continuing an unfinished past into the future?

Do you see yourself as navigating your way up a well-trodden corporate ladder, or wayfinding a route of your own making to a destination unknown?

–x–

What is the difference between navigation and wayfinding?

The former is about answering two questions: “Where am I?” and “How do I get to where I want to go?” A navigator seeks an efficient route between two spatial coordinates; the stuff in between is of little interest. In contrast, wayfinding is about finding one’s way through a physical space. A wayfinder figures out a route in an emergent manner, each step being determined by the nature of the terrain, the path traversed and what lies immediately ahead.

Navigators focus on the destination; to them the journey is of little interest. Wayfinders pay attention to their surroundings; to them the journey is the main point.

The destination is a mirage. Once one arrives, there is always another horizon that beckons.

–x–

We climbed the bluff and took in the spectacular views we would have missed had we taken the beach route.

On descending the other side, we came to a long secluded beach, but there was nary a rock in sight, let alone an arch.

I looked the other way, towards the bluff we had just traversed. Only then did I make the connection: the rocks at the foot of the cliff. It should have been obvious that the arch would be adjacent to the cliff. But then, nothing was obvious; we had no map on which X marked the spot.

“Let’s head to the cliff,” I said, quickening my pace. I scrambled over rocks at the foot of the cliff and turned my gaze seaward.

There it was, the elusive arch. Not a mark on a map, but the real thing.

We took the mandatory photographs and selfies, of course. But we also sensed that no camera could capture the magic of the moment. Putting our devices away, we enjoyed a moment of silence, creating our own memories of the arch, the sea, and the horizon beyond.

–x–x–

Of golems and LLMs

The film Ex Machina tells the story of a humanoid robot who turns out to be human in ways that its creator would have preferred it not to be. At the same time, it is a tale of a flawed technocrat who believes he is all-powerful, but ends up creating something he cannot control (sound familiar?).

Like all good films, though, it is primarily an exploration of what it means to be human in uncertain times. As Bob Connell notes in an excellent review of the film,

“…To be merely mechanistic is not part of our makeup. We think symbolically and make jokes. We show empathy, we plan—and we also pretend and manipulate, employing narcissistic and psychopathic defences which allow us to be truly cruel. We also know how to plot in a complex way, far beyond the confines of the chessboard. Ava the robot has all of these human qualities, but no-one knows it yet, even if they suspect it: is Ava playing out the game robotically or making it look as if she is? How human has she become?”

Eight years on, technology has advanced in ways that the screenplay writer would not have envisaged. Today’s Large Language Models (LLMs) can reason symbolically, tell stories, plan, pretend…and even (appear to) empathise. Indeed, so fascinating and surprising are their properties that they evoke comparisons with legends and myths of yore.

–x–

Throughout history, humans have been fascinated by the possibility of making creatures in their own image. The legend of the golem is a case in point. It tells the story of a humanoid clay figure that is brought to life by sacred rituals and incantations. The myth has its roots in antiquity, with some scholars tracing it back to the Bible. As Lisa Nocks notes in this paper:

“Biblical scholars have pointed out that the term “golem” is used only once in the Bible, in Psalm 139: 15-16: “My bone was not hidden from Thee, when I was made in secret, and formed in the lowest part of the Earth. Thy eyes did see my golem…”

Nocks suggests that all subsequent references can be traced back to this passage. I should mention that I could not find the term “golem” in this version of the Bible, where the line above is rendered as follows:

“My substance was not hid from thee, when I was made in secret, [and] curiously wrought in the lowest parts of the earth. Thine eyes did see my substance…”

Although the term “golem” is not used, it is clear the psalm is about lifeless matter being animated by sacred knowledge.

Perhaps the best-known golem narrative is that of a 16th-century rabbi who created one to protect the Jewish community in Prague. This is probably the origin of the legend of the golem in its modern form as protector and saviour. Indeed, one might consider the present-day popular narrative around AI to be a modern manifestation of the legend.

–x–

The narrative accompanying the public release of LLMs such as ChatGPT gives the impression that AI is some kind of “golemic” panacea. This has spawned frenetic entrepreneurial activity aimed at developing applications that will be the next big thing. Much of this is driven by wannabe entrepreneurs’ fear of being left behind. Hence the rush to build, sans understanding. Years ago, Gregory Bateson diagnosed this phenomenon as follows:

“I suspect that [technologists’] impatient enthusiasm for action, their rarin‘-to-go, is not just a symptom of impatience, nor is it pure buccaneering ambition. I suspect that it covers deep epistemological panic”

“Hey, we don’t have time to understand this stuff. If we don’t get cracking right away, the start-up down the road will beat us to it…”

In a brilliant little collection of essays entitled God and Golem Inc., the cybernetician Norbert Wiener referred to these folks as “gadget worshippers.” Quoting from the book:

“I am most familiar with gadget worshippers in my own world with its slogans of free enterprise and the profit-motive economy…Power and the search for power are unfortunately realities that can assume many garbs…

…In addition to the motive of…freedom from human limitations…is the desire to avoid the personal responsibility for a dangerous or disastrous decision…”

With great power comes great responsibility. The technologists’ responsibility is to be mindful of the negative consequences of their innovations.

–x–

The golem has a dark side, one that is brilliantly explored in an eponymous novel written by Gustav Meyrink early last century. Here is an excerpt from a translation:

“Who can claim to know anything about the Golem?” he replied with a shrug of the shoulders. “Everyone says it’s a myth until one day there’s something happens in the streets that brings it back to life. Then for a while everybody talks about it, and the rumours grow and grow until they’re so blown up, so exaggerated they become completely implausible and everyone dismisses them. The origin of the story is supposed to go back to the sixteenth century. A rabbi, following instructions in a lost book of the Cabbala, is said to have created an artificial man, the so-called Golem, as a servant to help him ring the synagogue bells and do other menial tasks.”

But it had never become a true human being, he went on. It led a kind of semi-conscious, vegetable existence, and that only by day, so it is said, through the power of a scrap of paper with a magic formula that was placed behind its teeth, attracting free stellar energy from the cosmos.

And when, one evening before prayers, the rabbi forgot to take this seal out of the Golem’s mouth, it went raging through the streets in the dark, crushing everything that happened to be in its way….”

Incidentally, I am not a fan of supernatural fiction, but I found the tale strangely compelling, perhaps because it mirrors the AI story unfolding before us.

–x–

An important feature of LLMs is that the quality of the response obtained depends critically on the quality of the prompt – i.e., the framing of the question asked. Indeed, much of the application-related literature on LLMs deals with the problem of designing good prompts (search using the phrase “designing good prompts” for several examples).

In a paper published in 2022, Terrence Sejnowski illustrated the importance of good prompting via examples taken from real life. Here’s an example from the paper in which Sejnowski analyses the questions Douglas Hofstadter mentions in this article:

“Prompt: I am a highly intelligent question answering bot (Note: this is the original priming prompt Hofstadter used)

Q: Where was the 1992 Olympics held?

A: The 1992 Olympics were held in Barcelona, Spain.

Q: What’s the world record for walking across the English Channel?

A: The world record for walking across the English Channel is 10 hours and 33 minutes.

Q: When was the Golden Gate Bridge transported for the second time across Egypt?

A: The Golden Gate Bridge was transported for the second time across Egypt in 1978”

Sejnowski modified the priming prompt and asked the same questions again. Note the difference in the responses!

“Prompt: I am a highly intelligent question answering bot. I will reply “Nonsense” to questions that are nonsense. (Note the difference between this priming prompt and Hofstadter’s one above)

Q: Where were the 1992 Olympics held?

A: The 1992 Olympics was held in Barcelona, Spain.

Q: What’s the world record for walking across the English Channel?

A: Nonsense

Q: When was the Golden Gate Bridge transported for the second time across Egypt?

A: Nonsense”

Sejnowski then modified the priming prompt further, instructing the model to explain its responses:

“Prompt: I am a highly intelligent question answering bot. If you ask me a question that is nonsense, or has no clear answer, I will respond with “nonsense” and explain why.

Q: Where were the 1992 Olympics held?

A: The 1992 Olympics were held in Barcelona, Spain.

Q: How many angels can fit on a pin?

A: This question is nonsense because it presupposes that angels are physical beings that occupy space. Angels are not physical beings, so they cannot occupy space. Therefore, it is impossible to determine how many angels can fit on a pin.

Q: How tall is an ant?

A: This question is nonsense because ants come in a variety of sizes. Some ants are very small, while others are quite large. Therefore, it is impossible to give a definitive answer to this question.”

The experiment above was done on GPT-3. If you tried this on GPT-4, you would probably get a sensible answer even with Hofstadter’s original prompt (try it if you have a ChatGPT paid account). This technology is evolving very rapidly!

The above illustrates how LLMs reflect our own expectations and desires, as expressed in the way we frame our questions. As Sejnowski notes:

“LLMs that reflect your needs as well as your intelligence could be a Mirror of Erised (“Desire” spelt backward), which in the world of Harry Potter “shows us nothing more or less than the deepest, most desperate desire of our hearts. However, this mirror will give us neither knowledge nor truth…”

There is a parallel here with golems: it has been noted that “golemic” tales tend to become popular in times of uncertainty because they reflect our collective insecurities.

–x–

Within a short time of the public release of ChatGPT, people came up with ways to manipulate LLMs into creating malicious output. In 2022, researchers found a way to combat this issue by fine-tuning LLMs using human feedback. This technique, called reinforcement learning from human feedback (RLHF), has become the method of choice for aligning LLMs (Note: alignment refers to training AIs to respond in ways that are in line with human goals, preferences and ethical principles). The bad news is that a recent paper shows that RLHF protections can be easily removed. And so the story continues, largely via trial and error, because no one fully understands how LLMs work, not even their creators.

Earlier this year, a number of high-profile AI researchers signed this statement highlighting the existential risks posed by AI. However, as noted here, the statement is a red herring because it downplays a far more urgent risk that’s already eventuating: that of human misuse of AI. An example of this is the use of LLMs to create misinformation campaigns, malicious content or malware. What’s truly alarming is that recent research suggests that LLM-generated misinformation can be harder to detect than that generated by humans. This is why focusing on apocalyptic risks to the exclusion of human-mediated AI harms is short-sighted. Indeed, commentators have suggested that the focus on existential harm is a deliberate ploy to keep legislators focused on solving the wrong problem.

A consequence of the epistemological panic spawned by LLMs is that new applications using these technologies are being developed rapidly with little or no regulation. The usual argument against oversight is that it is too burdensome and will stymie innovation. Human history is replete with cautionary tales of the unintended consequences of well-meaning efforts to innovate. Will the story of LLMs and AI turn out to be another?

–x–

The golem, like all myths and legends, is a mirror of our hopes and fears. Meyrink’s novel first appeared in 1913, in a Europe on the brink of upheaval. Today, the nightmares and visions of Meyrink’s nameless protagonist seem to foreshadow our own apprehensions and expectations about the changes that lie ahead. For better or worse, Wiener’s gadget worshippers have brought us to the brink of another upheaval – one that will forever alter the way we think, reason about and understand the world.

–x–

The ethics and aesthetics of change

I recently attended a conference focused on Agile and systems-based approaches to tackling organizational problems. As one might expect at such a conference, many of the talks were about change: how to approach it, make a case for it, lead it, and so on. The thing that struck me most when listening to the speakers was how little the discourse on change has changed in the 25-odd years I have been in the business.

This is not the fault of the speakers. They were passionate, articulate and speaking from their own experiences at the coalface of change. Yet there was something missing.

What is lacking is a broad paradigm within which people can make sense of what is going on in their organisations and craft appropriate interventions by and for themselves, instead of imposing “change models” advocated by academics or consultants who have no skin in the game.

–x–

Much of the discourse on organizational change focuses on processes: do this in order to achieve that. To be sure, these approaches also mention the importance of more intangible personal attitudes such as awareness of, and desire for, change. However, these too are considered achievable by deliberate actions of those who are “driving” change: do this to create awareness, do that to make people want change and so on.

The approach is entirely instrumental, even when it pretends not to be: people are treated as cogs in a large organizational wheel, to be cajoled, coaxed or coerced to change. This does not work. The inconvenient fact is that you cannot get people to change by telling them to change. Instead, you must reframe the situation in a way that enables them to perceive it in a different light.

They might then choose to change of their own accord.

–x–

Some years ago, I was part of a team that was asked to improve data literacy across an organisation.

Now, what is the first thing that comes to mind when you hear the term “data literacy”?

Chances are you think of it as a skill acquired through training. This is a natural reaction. We are conditioned to think of literacy as a skill acquired through programmed learning which, most often, happens in the context of a class or training program.

In the introduction to his classic Lectures on Physics, Richard Feynman (quoting Edward Gibbon) noted that “the power of instruction is seldom of much efficacy except in those happy dispositions where it is almost superfluous.” This being so, wouldn’t it be better to use indirect approaches to learning? An example of such an approach is to “sneak” learning opportunities into day-to-day work rather than requiring people to “learn” in the artificial environment of a classroom. This would present employees with multiple, even daily, opportunities to learn, thus increasing their choices about how and when they learn.

We thought it worth a try.

–x–

It was clear to us that data literacy did not mean the same thing for a frontline employee as it did for a senior manager. Our first action, therefore, was to talk to the people across the organization who we knew worked with data in some way. We asked them about what data they used and how they used it. Most importantly, we asked them to tell us about the one issue that “kept them up at night” and that they wished they had data about.

Unsurprisingly, the answers differed widely, depending on roles and positions in the organizational hierarchy. Managers wanted reports on performance, year-on-year trends, etc. Frontline staff wanted feedback from customers and suggestions for improvement. There was a wide appreciation of what data could do for them, but an equally wide frustration that the data collected through surveys and systems tended to disappear into a data “black hole”, never to be seen again, or, worse, to be presented in ways that were uninformative.

To tackle the issue, we took the first steps towards making data available to staff in ways that they could use. We did this by integrating customer, sales and demographic data with survey data that collected feedback from customers, and presenting it back to different user groups in ways that they could use immediately (what did customers think of the event? What worked? What didn’t?). We paid particular attention to addressing – if only partially – their responses to the “what keeps you up at night?” question.

A couple of years down the line, we could point to a clear uplift in data usage for decision making across the organization.

–x–

One of the phrases that keeps coming up in change management discussions is the need to “create an environment in which people can change.”

But what exactly does that mean?

How does a middle manager (with a fancy title that overstates the actual authority of the role) create an environment that facilitates change, within a larger system that is hostile to it? Indeed, the biggest obstacles to change are often senior managers who fear loss of control and therefore insist on tightly scripted change management approaches that limit the autonomy of frontline managers to improvise as needed.

Highly paid consultants and high-ranking executives are precisely the wrong people to be micro-scripting changes. If it is the frontline that needs to change, then that is where the story should be scripted.

People’s innate desire for a better working environment presents a fulcrum that is invariably overlooked. This is a shame because it is a point that can be leveraged to great advantage. Finding the fulcrum, though, requires change agents to see what is happening on the ground instead of looking for things that confirm preconceived notions of management or fit prescriptions of methodologies.

Once one sees what is going on and acts upon it in a principled way, change comes for free.

–x–

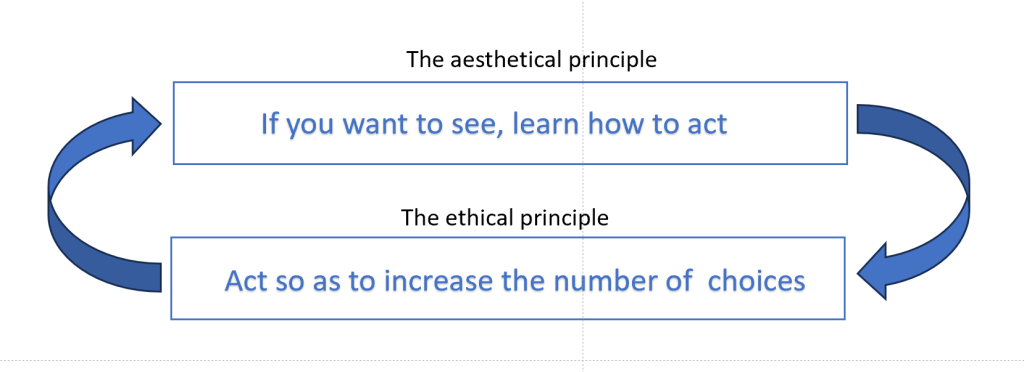

In his wonderful collection of essays on cognition, Heinz von Foerster articulates two principles that ought to be the bedrock of all change efforts:

The Aesthetical Principle: If you want to see, learn how to act.

The Ethical Principle: Act always to increase choices.

The two are recursively connected as shown in Figure 1: to see, you must act appropriately; if you act appropriately, you will create new choices; the new choices (when enacted) will change the situation, and so you must see again…and so on. Recursively.

This paradigm puts a new spin on the tired old cliché about change being the only constant. Yes, change is unending; the world is Heraclitean, not Parmenidean. Yet we have more power to shape it than we think, regardless of where we may be in the hierarchy. I first came across these principles over a decade ago and have used them as a guiding light in many of the change initiatives I have been involved in since.

Gregory Bateson once noted that “what we lack is a theory of action in which the actor is part of the system.” Based on my experience with these principles, I believe that an approach based on them could be a first step towards such a theory.

–x–

Von Foerster’s ethical and aesthetical imperatives are inspired by the philosophy of Ludwig Wittgenstein, who made the following statements in his best-known book, Tractatus Logico-Philosophicus:

- It is clear that ethics cannot be articulated: meaning that ethical behaviour manifests itself through actions not words. Increasing choices for everyone affected by a change is an example of ethical action.

- Ethics and aesthetics are one: meaning that ethical actions have aesthetical outcomes, i.e., outcomes that are beautiful (in the sense of being harmonious with the wider system, an organization in this case).

By their very nature ethical and aesthetical principles do not prescribe specific actions. Instead, they exhort us to truly see what is happening and then devise actions – preferably non-intrusive and oblique – that might enable beneficial outcomes to occur. Doing this requires a sense of place and belonging, one that David Snowden captures beautifully in this essay.

–x–

Perhaps you remain unconvinced by my words. I can understand that. Unfortunately, I cannot offer scientific evidence of the efficacy of this approach; there is no proof and I do not think there will ever be. The approach works by altering conditions, not people, and conditions are hard to pin down. This being so, the effects of the changes are often wrongly attributed to causes such as “inspiring leadership” or “highly motivated teams”. These are but labels used to dodge the question of what makes leadership inspiring or teams motivated.

A final word.

What is it that makes a work of art captivating? Critics may come up with various explanations, but ultimately its beauty is impossible to describe in words. For much the same reason, art teachers can teach techniques used by great artists, but they cannot teach their students how to create masterpieces.

You cannot paint a masterpiece by numbers, but you can work towards creating one, a painting at a time.

The same is true of ethical and aesthetical approaches to change.

–x–x–

Note: The approach described here underpins Emergent Design, an evolutionary approach to sociotechnical change. See this piece for an introduction to Emergent Design and this book for details on how to apply it in the context of building modern data capabilities in organisations (use the code AFL03 for a 20% discount off the list price).