The effect of task duration correlations on project schedules – a study using Monte Carlo simulation

Introduction

Some time ago, I wrote a couple of posts on Monte Carlo simulation of project tasks: the first post presented a fairly detailed introduction to the technique and the second illustrated its use via three simple examples. The examples in the latter demonstrated the effect of various dependencies on overall completion times. The dependencies discussed were: two tasks in series (finish-to-start dependency), two tasks in series with a time delay (finish-to-start dependency with a lag) and two tasks in parallel (start-to-start dependency). All of these are dependencies in timing: i.e. they dictate when a successor task can start in relation to its predecessor. However, there are several practical situations in which task durations are correlated – that is, the duration of one task depends on the duration of another. As an example, a project manager working for an enterprise software company might notice that the longer it takes to elicit requirements the longer it takes to customise the software. When tasks are correlated thus, it is of interest to find out the effect of the correlation on the overall (project) completion time. In this post I explore the effect of correlations on project schedules via Monte Carlo simulation of a simple “project” consisting of two tasks in series.

A bit about what’s coming before we dive into it. I begin with a brief discussion on how correlations are quantified. I then describe the simulation procedure, following which I present results for the example mentioned earlier, with and without correlations. I then present a detailed comparison of the results for the uncorrelated and correlated cases. It turns out that correlations increase uncertainty. This seemed counter-intuitive to me at first, but the simulations helped me see why it is so.

Note that I’ve attempted to keep the discussion intuitive and (largely) non-mathematical by relying on graphs and tables rather than formulae. There are a few formulae but most of these can be skipped quite safely.

Correlated project tasks

Imagine that there are two project tasks, A and B, which need to be performed sequentially. To keep things simple, I’ll assume that the durations of A and B are described by a triangular distribution with minimum, most likely and maximum completion times of 2, 4 and 8 days respectively (see my introductory Monte Carlo article for a detailed discussion of this distribution – note that I used hours as the unit of time in that post). In the absence of any other information, it is reasonable to assume that the durations of A and B are independent or uncorrelated – i.e. the time it takes to complete task A does not have any effect on the duration of task B. This assumption can be tested if we have historical data. So let’s assume we have the following historical data gathered from 10 projects:

| Duration A (days) | Duration B (days) |
| --- | --- |
| 2.5 | 3 |
| 3 | 3 |
| 7 | 7.5 |
| 6 | 4.5 |
| 5.5 | 3.5 |
| 4.5 | 4.5 |
| 5 | 5.5 |
| 4 | 4.5 |
| 6 | 5 |
| 3 | 3.5 |

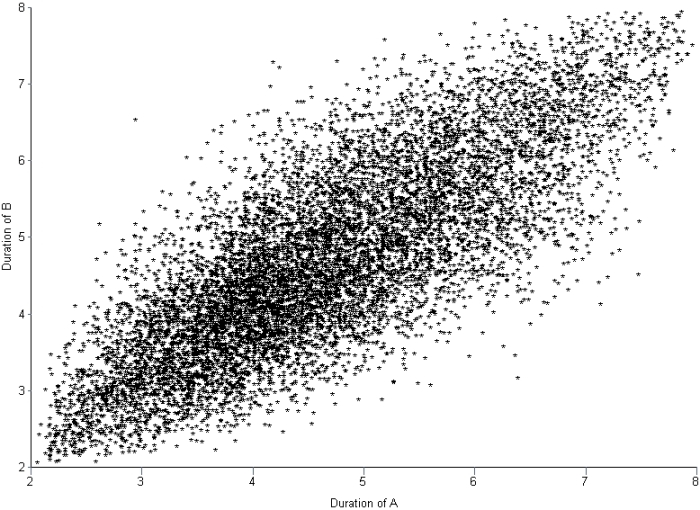

Figure 1 shows a plot of the duration of A vs. the duration of B. The plot suggests that there is a relationship between the two tasks – the longer A takes, the more likely it is that B will take longer too.

In technical terms we would say that A and B are positively correlated (if one decreased as the other increased, the correlation would be negative).

There are several measures of correlation, the most common one being Pearson’s coefficient of correlation, $r$, which is given by

$$
r = \frac{\sum (x_i - \bar{x})(y_i - \bar{y})}{\sqrt{\sum (x_i - \bar{x})^2 \, \sum (y_i - \bar{y})^2}} \qquad (1)
$$

In this case $x_i$ and $y_i$ are the durations of the tasks A and B the $i$th time the project was performed, $\bar{x}$ the average duration of A, $\bar{y}$ the average duration of B and $N$ the total number of data points (10 in this case). The capital sigma ($\Sigma$) simply denotes a sum from 1 to $N$.

The Pearson coefficient can vary between -1 and 1: the former being a perfect negative correlation and the latter a perfect positive one [Note: The Pearson coefficient is sometimes referred to as the product-moment correlation coefficient]. On calculating the coefficient for the above data, using the CORREL function in Excel, I get a value of 0.787 (note that one could just as well use the PEARSON function). This is a good indication that there is something going on here – the two tasks are likely not independent as originally assumed. Note that the correlation coefficient does not tell us anything about the form of the dependence between A and B; it only tells us that they are dependent and whether the dependence is positive or negative. It is also important to note that there is a difference between quantifying the correlation via the Pearson (or any other) coefficient and developing an understanding of why there is a correlation. The coefficient tells us nothing about the latter.
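For readers who would rather not use a spreadsheet, the calculation can be sketched in a few lines of Python. This is a minimal illustration using only the standard library; the names `duration_a`, `duration_b` and `pearson` are my own choices for the example, and the data are the ten historical points from the table above.

```python
import math

# Historical durations (days) for tasks A and B, taken from the table above.
duration_a = [2.5, 3, 7, 6, 5.5, 4.5, 5, 4, 6, 3]
duration_b = [3, 3, 7.5, 4.5, 3.5, 4.5, 5.5, 4.5, 5, 3.5]


def pearson(xs, ys):
    """Pearson product-moment correlation coefficient of two equal-length lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of products of deviations from the means (the numerator).
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    # Sums of squared deviations (the two factors under the square root).
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


r = pearson(duration_a, duration_b)
print(f"Pearson r = {r:.3f}")  # prints "Pearson r = 0.787"
```

The result agrees with the value returned by Excel's CORREL function.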

If A and B are correlated as discussed above, simulations which assume the tasks to be independent will not be correct. In the remainder of this article I’ll discuss how correlations affect overall task durations via a Monte Carlo simulation of the aforementioned example.

Simulating correlated project tasks

There are two considerations when simulating correlated tasks. The first is to characterize the correlation accurately. For the purposes of the present discussion I’ll assume that the correlation is described adequately by a single coefficient as discussed in the previous section. The second issue is to generate correlated completion times that satisfy the individual task duration distributions (remember that the two tasks A and B have completion times that are described by a triangular distribution with minimum, most likely and maximum times of 2, 4 and 8 days). What we are asking for, in effect, is a way to generate a series of pairs of correlated random numbers, each of which satisfies the triangular distribution.

The best known algorithm to generate correlated sets of random numbers in a way that preserves the individual (input) distributions is due to Iman and Conover. The beauty of the Iman-Conover algorithm is that it takes the uncorrelated data for tasks A and B (simulated separately) as input and induces the desired correlation by simply re-ordering the uncorrelated data. Since the original data is not changed, the distributions for A and B are preserved. Although the idea behind the method is simple, it is technically quite complex. The details of the technique aren’t important – but I offer a partial “hand-waving” explanation in the appendix at the end of this post. Fortunately, I didn’t have to implement the Iman-Conover algorithm because someone else has done the hard work: Steve Roxburgh has written a graphical tool to generate sets of correlated random variables using the technique (follow this link to download the software and this one to view a brief tutorial). I used Roxburgh’s utility to generate sets of random variables for my simulations.

I looked at two cases: the first with no correlation between A and B and the second with a correlation of 0.79 between A and B. Each simulation consisted of 10,000 trials – basically I generated two sets of 10,000 triangularly-distributed random numbers, the first with a correlation coefficient close to zero and the second with a correlation coefficient of 0.79. Figures 2 and 3 depict scatter plots of the durations of A vs. the durations of B (for the same trial) for the uncorrelated and correlated cases. The correlation is pretty clear to see in Figure 3.

To check that the generated trials for A and B do indeed satisfy the triangular distribution, I divided the difference between the minimum and maximum times (for the individual tasks) into 0.5 day intervals and plotted the number of trials that fall into each interval. The resulting histograms are shown in Figure 4. Note that the blue and red bars are frequency plots for the case where A and B are uncorrelated and the green and pink (purple?) bars are for the case where they are correlated.

The histograms for all four cases are very similar, demonstrating that they all follow the specified triangular distribution. Figures 2 through 4 give confidence (but do not prove!) that Roxburgh’s utility works as advertised: i.e. that it generates sets of correlated random numbers in a way that preserves the desired distribution.
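The binning check described above is easy to reproduce. The sketch below is an illustration only: it draws its own triangular samples with Python's standard `random.triangular` rather than using the output of Roxburgh's utility, and the seed, the constant names and the `histogram` helper are assumptions made for the example.

```python
import random

LOW, MODE, HIGH = 2.0, 4.0, 8.0   # triangular parameters (days)
BIN_WIDTH = 0.5                   # width of each histogram interval (days)


def histogram(samples, low=LOW, high=HIGH, width=BIN_WIDTH):
    """Count how many samples fall into each interval of the given width."""
    n_bins = int(round((high - low) / width))
    counts = [0] * n_bins
    for x in samples:
        # Clamp so that a sample exactly equal to `high` lands in the last bin.
        k = min(int((x - low) / width), n_bins - 1)
        counts[k] += 1
    return counts


rng = random.Random(42)  # fixed seed so that the run is repeatable
# Note the argument order of random.triangular: (low, high, mode).
samples = [rng.triangular(LOW, HIGH, MODE) for _ in range(10_000)]
counts = histogram(samples)

for k, count in enumerate(counts):
    start = LOW + k * BIN_WIDTH
    print(f"{start:4.1f} to {start + BIN_WIDTH:4.1f} days: {count}")
```

The counts should rise roughly linearly up to the interval around 4 days and then fall off more gently towards 8 days, which is the signature of the triangular distribution used here.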

Now, to simulate A and B in sequence I simply added the durations of the individual tasks for each trial. I did this twice – once each for the correlated and uncorrelated data sets – which yielded two sets of completion times, varying between 4 days (the theoretical minimum) and 16 days (the theoretical maximum). As before, I plotted a frequency histogram for the uncorrelated and correlated case (see Figure 5). Note that the difference in the heights of the bars has no significance – it is an artefact of having the same number of trials (10,000) in both cases. What is significant is the difference in the spread of the two plots – the correlated case has a greater spread signifying an increased probability of very low and very high completion times compared to the uncorrelated case.

Note that the uncorrelated case resembles a Normal distribution – it is more symmetric than the original triangular distribution. This is a consequence of the Central Limit Theorem, which states that the sum of a large number of identically distributed, independent (i.e. uncorrelated) random numbers tends towards a Normal distribution, regardless of the form of the original distribution; even with just two tasks, the sum is already noticeably more symmetric than its parts. The correlated distribution, on the other hand, has retained the shape of the original triangular distribution. This is no surprise: the relatively high correlation coefficient ensures that A and B will behave in a similar fashion and, hence, so will their sum.

Figure 6 is a plot of the cumulative distribution function (CDF) for the uncorrelated and correlated cases. The value of the CDF at any time $t$ gives the probability that the overall task will finish within time $t$.

The cumulative distribution clearly shows the greater spread in the correlated case: for small values of $t$, the correlated distribution is significantly greater than the uncorrelated one; whereas for high values of $t$, the correlated distribution approaches the limiting value of 1 more slowly than the uncorrelated distribution. Both these factors point to a greater spread in the correlated case. The spread can be quantified by looking at the standard deviation of the two distributions. The standard deviation, often denoted by the small Greek letter sigma ($\sigma$), is given by:

$$
\sigma = \sqrt{\frac{1}{N}\sum (t_i - \bar{t})^2} \qquad (2)
$$

where $N$ is the total number of trials (10,000), $t_i$ is the completion time for the $i$th trial and $\bar{t}$ is the average completion time, which is given by

$$
\bar{t} = \frac{1}{N}\sum t_i \qquad (3)
$$

In both (2) and (3), $\Sigma$ denotes a sum over all trials.

The average completion times, $\bar{t}$, for the uncorrelated and correlated cases are virtually identical: 9.32 days and 9.34 days respectively. On the other hand, the standard deviations for the two cases are 1.77 and 2.34 days respectively – demonstrating the wider spread in possible completion times for the correlated case. And, of course, a wider spread means greater uncertainty.
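The comparison can be reproduced approximately with a short script. Please note that this is not Roxburgh's utility, nor a full implementation of the Iman-Conover algorithm: it is a simplified sketch of the re-ordering idea, in which the reference sample is assumed to be a pair of correlated Normal variables. The function names, the seed and the `target_corr` parameter are all assumptions made for the illustration.

```python
import math
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length lists."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def ranks(values):
    """Zero-based rank of each value, i.e. its position in sorted order."""
    order = sorted(range(len(values)), key=values.__getitem__)
    result = [0] * len(values)
    for rank, index in enumerate(order):
        result[index] = rank
    return result


def reorder_like(sample, reference):
    """Re-order `sample` so that it has the same rank order as `reference`."""
    sorted_sample = sorted(sample)
    return [sorted_sample[r] for r in ranks(reference)]


def mean_and_sd(ts):
    """Mean and standard deviation (dividing by N) of a list of times."""
    n = len(ts)
    mean = sum(ts) / n
    return mean, math.sqrt(sum((t - mean) ** 2 for t in ts) / n)


def simulate(n_trials=10_000, target_corr=0.79, seed=1):
    rng = random.Random(seed)
    # Independent triangular durations; argument order is (low, high, mode).
    a = [rng.triangular(2, 8, 4) for _ in range(n_trials)]
    b = [rng.triangular(2, 8, 4) for _ in range(n_trials)]
    # Assumed reference sample: pairs of Normal variables with the target
    # (linear) correlation.
    z1 = [rng.gauss(0, 1) for _ in range(n_trials)]
    z2 = [target_corr * z + math.sqrt(1 - target_corr ** 2) * rng.gauss(0, 1)
          for z in z1]
    # Induce the correlation purely by re-ordering the triangular samples.
    a_corr = reorder_like(a, z1)
    b_corr = reorder_like(b, z2)
    # Two tasks in series: the completion time is the sum of the durations.
    total_uncorr = [x + y for x, y in zip(a, b)]
    total_corr = [x + y for x, y in zip(a_corr, b_corr)]
    return {
        "corr_uncorrelated": pearson(a, b),
        "corr_correlated": pearson(a_corr, b_corr),
        "uncorrelated": mean_and_sd(total_uncorr),
        "correlated": mean_and_sd(total_corr),
    }


res = simulate()
for case in ("uncorrelated", "correlated"):
    mean, sd = res[case]
    print(f"{case}: mean = {mean:.2f} days, sd = {sd:.2f} days")
```

Because the correlated durations here are just the uncorrelated ones in a different order, the two means agree by construction, whereas the standard deviation should come out noticeably larger in the correlated case. The induced Pearson coefficient typically lands a little below the target, since re-ordering matches rank order rather than linear correlation.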

So, the simulations tell us that correlations increase uncertainty. Let’s try to understand why this happens. Basically, if tasks are correlated positively, they “track” each other: that is, if one takes a long time so will the other (with the same holding for short durations). The upshot of this is that the overall completion time tends to get “stretched” if the first task takes longer than average whereas it gets “compressed” if the first task finishes earlier than average. Since the net effect of stretching and compressing would balance out, we would expect the mean completion time (or any other measure of central tendency – such as the mode or median) to be relatively unaffected. However, because extremes are amplified, we would expect the spread of the distribution to increase.
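The same conclusion follows from the standard result for the variance of a sum of two random variables. What follows is a rough check rather than part of the simulation: it assumes that each task has the textbook variance of a triangular distribution with minimum $a = 2$, most likely value $c = 4$ and maximum $b = 8$, and it takes $r$ to be the Pearson correlation between the two durations.

$$
\sigma_A^2 = \sigma_B^2 = \frac{a^2 + b^2 + c^2 - ab - ac - bc}{18} = \frac{84 - 56}{18} \approx 1.56
$$

$$
\sigma_{A+B}^2 = \sigma_A^2 + \sigma_B^2 + 2 r \sigma_A \sigma_B \approx 2 \times 1.56 \times (1 + r)
$$

For $r = 0$ this gives $\sigma_{A+B} \approx 1.76$ days and for $r = 0.79$ it gives $\sigma_{A+B} \approx 2.36$ days, both close to the simulated values of 1.77 and 2.34. The mean, in contrast, is simply the sum of the two task means, $2 \times 14/3 \approx 9.33$ days, whatever the value of $r$.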

Wrap-up

In this post I have highlighted the effect of task correlations on project schedules by comparing the results of simulations for two sequential tasks with and without correlations. The example shows that correlations can increase uncertainty. The mechanism is easy to understand: correlations tend to amplify extreme outcomes, thus increasing the spread in the resulting distribution. The effect of the correlation (compared to the uncorrelated case) can be quantified by comparing the standard deviations of the two cases.

Of course, quantifying correlations using a single number is simplistic – real life correlations have all kinds of complex dependencies. Nevertheless, it is a useful first step because it helps one develop an intuition for what might happen in more complicated cases: in hindsight it is easy to see that (positive) correlations will amplify extremes, but the simple model helped me really see it.

— —

Appendix – more on the Iman-Conover algorithm

Below I offer a hand-waving, half-explanation of how the technique works; those interested in a proper, technical explanation should see this paper by Stephen Mildenhall.

Before I launch off into my explanation, I’ll need to take a bit of a detour on coefficients of correlation. The title of Iman and Conover’s paper talks about rank correlation which is different from product-moment (or Pearson) correlation discussed in this post. A popular measure of rank correlation is the Spearman coefficient, $r_s$, which is given by:

$$
r_s = 1 - \frac{6 \sum d_i^2}{N(N^2 - 1)} \qquad (4)
$$

where $d_i$ is the rank difference between the duration of A and B on the $i$th instance of the project. Note that rank is calculated relative to all the other instances of a particular task (A or B). This is best explained through the table below, which shows the ranks for all instances of task A and B from my earlier example (columns 3 and 4).

| Duration A (days) | Duration B (days) | Rank A | Rank B | Rank difference squared |
| --- | --- | --- | --- | --- |
| 2.5 | 3 | 1 | 1 | 0 |
| 3 | 3 | 2 | 1 | 1 |
| 7 | 7.5 | 10 | 10 | 0 |
| 6 | 4.5 | 8 | 5 | 9 |
| 5.5 | 3.5 | 7 | 3 | 16 |
| 4.5 | 4.5 | 5 | 5 | 0 |
| 5 | 5.5 | 6 | 9 | 9 |
| 4 | 4.5 | 4 | 5 | 1 |
| 6 | 5 | 8 | 8 | 0 |
| 3 | 3.5 | 2 | 3 | 1 |

Note that ties cause the subsequent number to be skipped.

The last column lists the squared rank differences, $d_i^2$. The above can be used to calculate the Spearman coefficient, $r_s$, which works out to 0.776 – which is quite close to the Pearson coefficient calculated earlier (0.787). In practical terms, the Spearman coefficient is often considered to be an approximation to the Pearson coefficient.
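The rank calculation in the table can be checked with a few lines of Python. The sketch below follows the tie-handling used in the table (tied values share the lowest rank and the next rank is skipped); statistical packages usually assign average ranks to ties instead, so they may report a slightly different value. The function name `competition_ranks` is my own.

```python
# Historical durations (days) for tasks A and B, as in the table above.
duration_a = [2.5, 3, 7, 6, 5.5, 4.5, 5, 4, 6, 3]
duration_b = [3, 3, 7.5, 4.5, 3.5, 4.5, 5.5, 4.5, 5, 3.5]


def competition_ranks(values):
    """Rank each value as 1 + the number of strictly smaller values.

    Tied values therefore share a rank and the subsequent rank is skipped.
    """
    return [1 + sum(1 for other in values if other < v) for v in values]


rank_a = competition_ranks(duration_a)
rank_b = competition_ranks(duration_b)

# Squared rank differences (the last column of the table).
d_squared = [(ra - rb) ** 2 for ra, rb in zip(rank_a, rank_b)]

n = len(duration_a)
spearman = 1 - 6 * sum(d_squared) / (n * (n ** 2 - 1))

print("rank A:", rank_a)
print("rank B:", rank_b)
print(f"sum of squared rank differences = {sum(d_squared)}")  # 37
print(f"Spearman coefficient = {spearman:.3f}")               # 0.776
```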

With that background about the rank correlation, we can now move on to a brief discussion of the Iman-Conover algorithm.

In essence, the Iman-Conover method relies on reordering the set of to-be-correlated variables to have the same rank order as a reference distribution which has the desired correlation. To paraphrase from Mildenhall’s paper (my two cents in italics):

Given two samples of n values from known distributions X and Y *(the triangular distributions for A and B in this case)* and a desired correlation between them *(of 0.79)*, first determine a sample from a reference distribution that has exactly the desired linear correlation *(of 0.79)*. Then re-order the samples from X and Y to have the same rank order as the reference distribution. The output will be a sample with the correct *(individual, triangular)* distributions and with rank correlation coefficient equal to that of the reference distribution…. Since linear (Pearson) correlation and rank correlation are typically close, the output has approximately the desired correlation structure…

The idea is beautifully simple, but a problem remains. How does one calculate the required reference distribution? Unfortunately, this is a fairly technical affair for which I could not find a simple explanation – those interested in a proper, technical discussion of the technique should see Chapter 4 of Mildenhall’s paper or the original paper by Iman and Conover.

For completeness I should note that some folks have criticised the use of the Iman-Conover algorithm on the grounds that it generates rank correlated random variables instead of Pearson correlated ones. This is a minor technicality which does not impact the main conclusion of this post: i.e. that correlations increase uncertainty.
